Imagine navigating a bustling market abroad or collaborating with international colleagues: the live translation tool could eliminate the awkward pauses that come with language barriers. Based on beta findings, the feature supports key languages such as English, French, German, Portuguese, and Spanish, drawing from the same pool as iOS 26’s other translation options. It leverages Apple Intelligence, the company’s suite of AI tools, to handle translations quickly and privately on-device, without relying on cloud servers for every query.

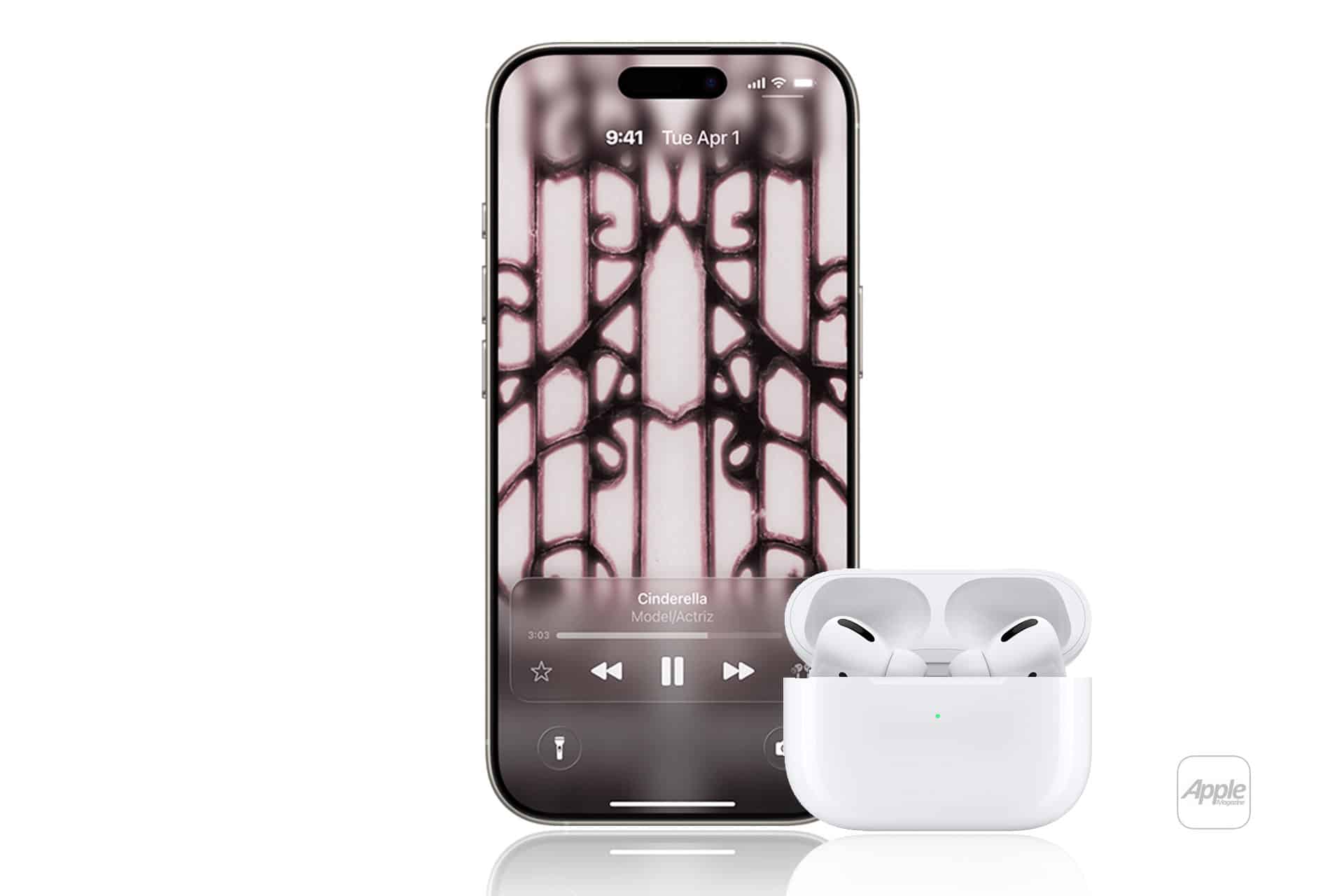

For users, this should mean faster responses and better accuracy in noisy environments, aided by AirPods’ noise-canceling microphones. The double-press gesture keeps things intuitive, similar to how users already summon Siri or switch audio modes. According to details uncovered in the beta, the feature appears tailored for AirPods Pro 2 and AirPods 4, models equipped with the hardware needed for precise audio capture and playback. This selectivity is likely meant to ensure consistent performance, as older models may lack the processing power or microphone sensitivity the feature requires.

In practice, the tool could shine in scenarios like ordering food in a foreign city or discussing details with a non-native speaker at work. It extends Conversation Boost, an existing AirPods feature that amplifies nearby voices, by adding intelligent language conversion. Users won’t need to hold their iPhone awkwardly: the earbuds deliver incoming speech discreetly, while the phone broadcasts the user’s replies. This setup promotes natural interactions that keep the focus on the person rather than the device.

Apple’s approach here prioritizes reliability over flashy gimmicks, building the feature on established technology that has been refined through successive betas. It also reflects a focus on features that serve practical needs, such as communicating across languages in an increasingly interconnected world.

Building on iOS 26’s Translation Foundation

iOS 26 already introduces live translation across several core apps, setting the stage for this AirPods expansion. In Messages, FaceTime, and Phone, users can translate text and audio in real time, with captions appearing on screen for clarity. These tools, powered by Apple Intelligence, support one-on-one calls and chats in the same languages, ensuring consistency across the ecosystem.

Extending this to AirPods makes sense as a natural progression, turning the earbuds into an always-on assistant for multilingual environments. Apple’s official announcements highlight how these features help users communicate effortlessly, translating spoken words during calls or displaying captions in video chats. The AirPods version fills a gap for in-person use, where pulling out a phone mid-conversation might disrupt the flow.

This integration underscores Apple’s strategy of layering intelligent capabilities onto familiar hardware. By delivering the feature through a software update rather than requiring new purchases, the company keeps costs down for owners of compatible AirPods while encouraging loyalty. Earlier reports suggested the feature had been in development as part of Apple’s AI-focused iOS roadmap. Now, with beta imagery confirming its arrival, it positions AirPods as more than audio devices: they become tools for breaking down communication barriers.

The emphasis on privacy remains key: translations are processed on-device where possible, in line with Apple’s stated focus on data security. This contrasts with some competitors’ cloud-dependent systems and offers users peace of mind in sensitive discussions.

Practical Impacts and User Benefits

For everyday users, this live translation could redefine how AirPods fit into routines. Travelers might rely on it for quick exchanges at airports or hotels, where understanding directions or menus is crucial. Business professionals could use it in meetings with global teams, ensuring no details get lost in translation. Even in diverse communities at home, it could foster inclusivity by making casual conversations accessible across languages.

The feature’s tie to Apple Intelligence means it requires a compatible iPhone, likely models from the iPhone 15 Pro onward, to handle the AI computations. This ensures smooth operation but might limit access for those with older devices. Still, for eligible users, it adds tangible value, enhancing AirPods’ role in health, entertainment, and now communication.

Apple’s track record with audio features, like adaptive noise control and spatial audio, shows a pattern of iterative improvements that prioritize user experience. This translation tool continues that trend, focusing on accuracy and ease. While initial languages are limited, expansions could follow based on user demand and tech advancements.

In a world where mobility and connectivity drive innovation, this update holds promise for making interactions more fluid. It rewards users who invest in Apple’s ecosystem, delivering features that evolve with software updates.

Evolution of Apple’s Language Tools

Apple’s work on translation traces back to Siri, whose basic phrase translations laid the groundwork. The Translate app followed in 2020 with iOS 14, offering offline support and a conversation mode. iOS 26 builds on this with AI-driven live translation integrated directly into calls and messages.

The AirPods addition represents a milestone, blending hardware and software for immersive use. Unlike standalone apps, it embeds translation into the audio pipeline, using beamforming mics to isolate voices. This tech, refined in features like Conversation Awareness, ensures translations cut through background noise.

Comparatively, rivals like Google’s Pixel Buds have offered similar tools since 2017, but Apple’s version emphasizes integration and privacy. By processing locally, it reduces latency and data exposure, appealing to users wary of cloud services. The result is a tool that’s not just functional but thoughtfully designed for real-world reliability.

For developers and enthusiasts, this could open doors to custom apps built on the feature, perhaps in education or customer service. Translation accuracy should also continue to improve as Apple refines the feature through successive betas.

Looking at Broader Implications

This feature signals Apple’s intent to make AirPods a cornerstone of personal tech, beyond music and calls. It aligns with trends in wearable intelligence, where devices anticipate needs like language support. For users, it means fewer barriers in a globalized society and more equal footing in conversations across languages.

Because Apple refines features like this based on user feedback, the translation tool should improve over time. It also extends device longevity, since software updates keep existing hardware relevant. As part of iOS 26’s lineup, which includes redesigned interfaces and enhanced apps, it contributes to a cohesive experience focused on practicality.

Ultimately, live translation via AirPods could become a staple for users seeking seamless connectivity. It exemplifies how tech can enhance human interactions, grounded in verified advancements that prioritize user needs.