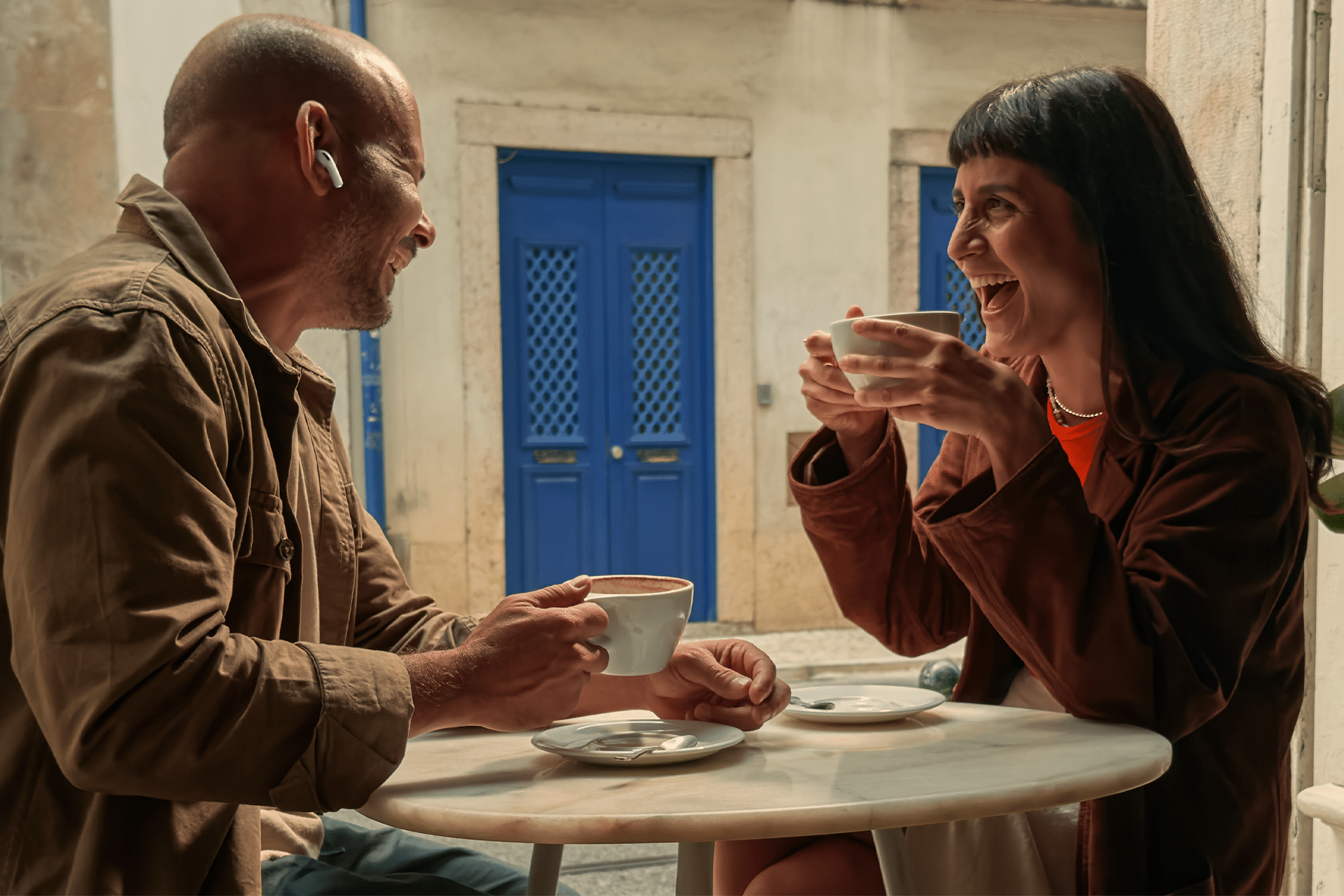

Apple is preparing to introduce a simultaneous translation feature for AirPods, starting with a rollout in Italy before expanding to other regions. The feature will allow users to hear real-time translations directly through their AirPods, while text captions appear on their iPhone, iPad, or Vision Pro screens.

According to Italian news outlet Il Post, Italy will be the first market where Apple tests this translation system. The initiative reflects Apple’s broader effort to make AirPods a central element of its AI and communication ecosystem, combining speech recognition, translation, and contextual intelligence in one experience.

The new capability builds on the live translation features first introduced in iOS 18 and expanded through Apple Intelligence. By combining on-device processing with Apple’s Private Cloud Compute, AirPods will be able to translate conversations between multiple languages almost instantly, a significant step toward natural, multilingual communication.

How the Technology Works

The translation process relies on Apple’s Neural Engine and optimized language models. When a user speaks, the AirPods capture the audio, transmit it to the connected device for analysis, and return the translated speech to the listener within a fraction of a second.
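The capture, analyze, and return flow described above can be pictured with a minimal Python sketch. It is not Apple's implementation: the `PHRASEBOOK` table, the `transcribe`, `translate`, and `run_pipeline` functions, and the `TranslationResult` type are all hypothetical stand-ins, and the "audio" is simulated as plain text so the example stays self-contained.

```python
from dataclasses import dataclass
import time

# Hypothetical toy phrasebook standing in for a real translation model.
PHRASEBOOK = {
    ("it", "en"): {"buongiorno": "good morning", "grazie": "thank you"},
}


@dataclass
class TranslationResult:
    source_text: str      # caption in the speaker's language
    translated_text: str  # caption (and spoken output) in the listener's language
    latency_ms: float     # time spent inside the pipeline


def transcribe(audio_frame: str) -> str:
    """Stand-in for speech recognition: the 'audio' here is already text."""
    return audio_frame.strip().lower()


def translate(text: str, source: str, target: str) -> str:
    """Stand-in for a language model: word-by-word phrasebook lookup."""
    table = PHRASEBOOK.get((source, target), {})
    return " ".join(table.get(word, word) for word in text.split())


def run_pipeline(audio_frame: str, source: str, target: str) -> TranslationResult:
    """Run capture -> transcribe -> translate and time the round trip."""
    start = time.perf_counter()
    text = transcribe(audio_frame)
    translated = translate(text, source, target)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return TranslationResult(text, translated, elapsed_ms)


result = run_pipeline("Buongiorno", "it", "en")
print(result.source_text, "->", result.translated_text)
```

Returning both the original and the translated text from one call mirrors the article's description of spoken output paired with on-screen captions; a real system would stream audio frames rather than process a finished string.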

In addition to spoken translation, users will see real-time captions on their Apple devices in both the original and translated languages. The captions let users check what they hear against the text and improve accessibility, which is particularly useful for travelers and bilingual households.

Apple has designed the system to handle both one-on-one conversations and larger group settings, automatically detecting the language being spoken and translating accordingly. Code found in recent iOS beta builds lists Italian as the primary supported language for the feature’s initial testing phase.
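To illustrate what automatic detection of the active language might involve, here is a deliberately simple Python sketch based on stopword overlap. The article does not describe Apple's detection method; the `STOPWORDS` table and the `detect_language` function are assumptions made purely for illustration, and production systems typically work on audio with trained models.

```python
# Hypothetical stopword lists; a real detector would use a trained model.
STOPWORDS = {
    "it": {"il", "la", "che", "di", "e", "per", "non", "sono"},
    "en": {"the", "that", "of", "and", "for", "not", "is", "are"},
}


def detect_language(utterance: str, default: str = "it") -> str:
    """Return the language whose stopwords overlap most with the utterance."""
    words = set(utterance.lower().split())
    scores = {lang: len(words & vocab) for lang, vocab in STOPWORDS.items()}
    best = max(scores, key=scores.get)
    # Fall back to the default when no stopword matched at all.
    return best if scores[best] > 0 else default


print(detect_language("Dove sono le chiavi di casa"))
print(detect_language("Where are the keys"))
```

In a group setting, a detector like this would run on each utterance so that the translation direction can switch as different people speak.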

Privacy and Accessibility at the Core

As with other Apple Intelligence features, privacy remains central to Apple’s implementation. Most translation runs locally on the device; only more complex requests, such as those involving extended dialogue, are routed through Apple’s Private Cloud Compute. That data is processed anonymously and not stored, in line with European privacy standards.
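The split between local processing and Private Cloud Compute can be thought of as a routing decision. The Python sketch below shows one way such a rule could look. The article gives no actual criteria, so the `MAX_LOCAL_TURNS` threshold, the `Route` enum, and the `choose_route` function are hypothetical.

```python
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud"


# Hypothetical cutoff: the article gives no figure for "extended dialogue".
MAX_LOCAL_TURNS = 4


def choose_route(dialogue_turns: list[str]) -> Route:
    """Keep short exchanges local; send extended dialogue to the cloud."""
    if len(dialogue_turns) > MAX_LOCAL_TURNS:
        return Route.PRIVATE_CLOUD
    return Route.ON_DEVICE


print(choose_route(["Ciao", "Hello"]))
print(choose_route(["turn"] * 6))
```

Defaulting to the on-device route matches the article's statement that most translation stays local, with the cloud path as the exception.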

Apple’s focus on accessibility also underpins the translation rollout. The company has long positioned AirPods as assistive devices through features like Conversation Boost and Live Listen. The upcoming simultaneous translation system expands that accessibility framework to support inclusive communication between speakers of different languages.

Why Italy Is the Starting Point

Italy’s selection as the first market for AirPods simultaneous translation underscores the country’s importance as a test region for language technologies. Italian users have historically been among the earliest adopters of Apple’s translation tools, including the Translate app and multilingual Siri.

Launching in Italy allows Apple to refine accuracy, latency, and contextual understanding before deploying the system globally. It also fits the regulatory climate in the EU, where local data processing and language inclusivity are increasingly prioritized for AI-powered services.

While Apple has not officially announced a release date, sources close to the development suggest the feature will arrive sometime in 2026 through a firmware update for AirPods Pro models equipped with the H2 chip. Broader device support, including standard AirPods and Beats models, may follow after further testing.

As competition among audio manufacturers intensifies, Apple differentiates itself by integrating translation directly into its hardware and operating systems rather than relying on third-party apps. This strategy gives Apple tighter control over performance and a more natural user experience, particularly within its own ecosystem.

The introduction of simultaneous translation in Italy marks a step forward in Apple’s effort to turn AirPods into intelligent companions: tools that not only deliver sound but also bridge communication across languages in real time.