Apple Intelligence is Apple’s core artificial intelligence initiative, designed to integrate AI capabilities deeply into iPhone, iPad, Mac, Apple Watch, and Apple Vision Pro while prioritizing user privacy and seamless system integration. In 2026, it powers real-time translation, visual intelligence, contextual automation, and fitness insights, and it lays the groundwork for future enhancements such as more advanced voice assistant interaction. These developments reflect both Apple’s cautious approach to AI and its strategy of embedding practical intelligence into devices that people rely on every day.

Apple’s recent updates show how Apple Intelligence enhances core interactions without compromising personal data. Live Translation, for example, translates conversations in real time in Messages, FaceTime, and phone calls, directly on device. Users can overcome language barriers in everyday conversation or while traveling, and their speech data stays private.

Visual intelligence now extends to on-screen content. Users can identify objects, summarize text, or turn a flyer into a calendar event with a single tap. Because it works on whatever is displayed, these actions are available across apps, which makes them easier to discover and more broadly useful.

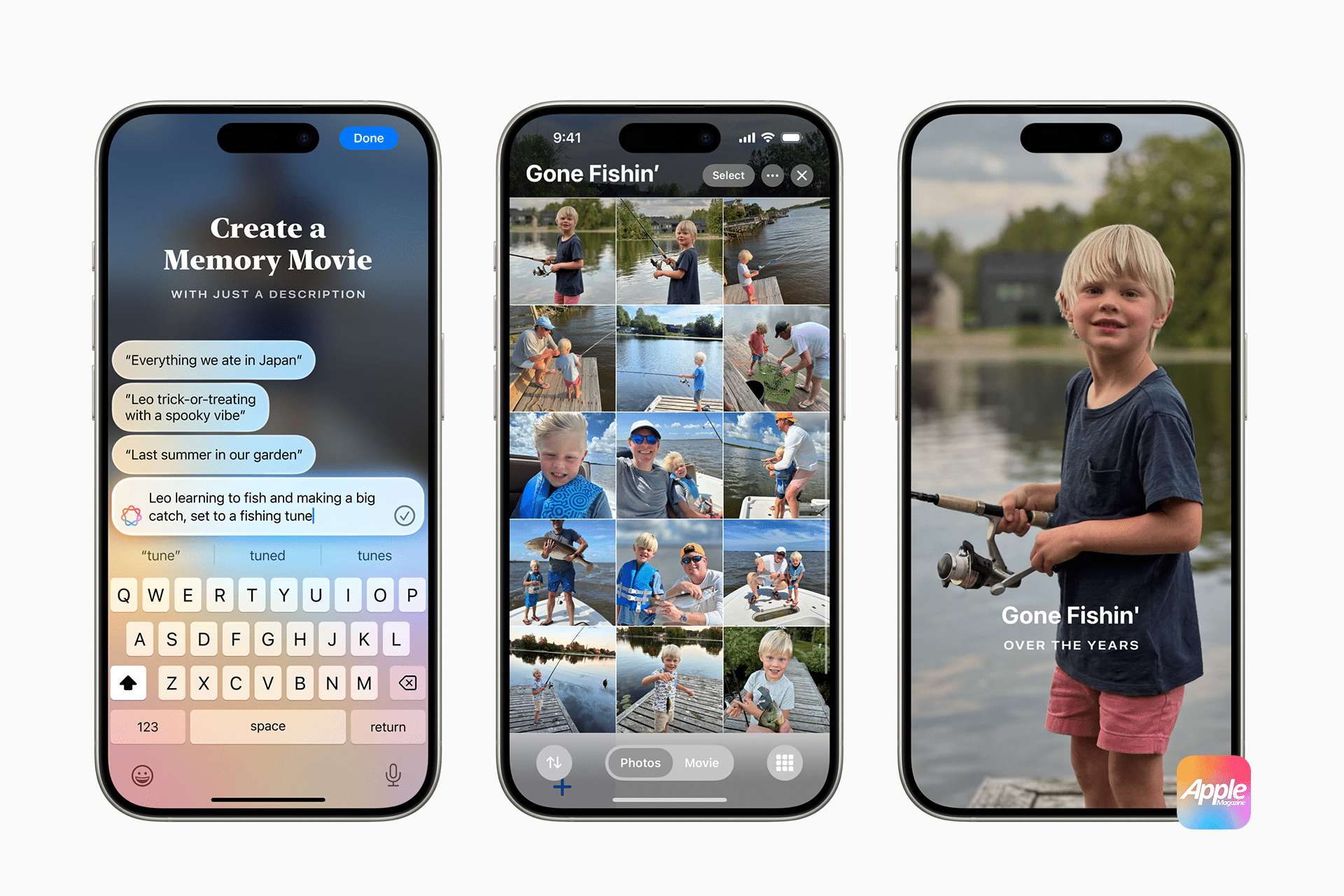

Support for intelligent automation is also expanding. Shortcuts can now tap into Apple Intelligence models, either on device or through Private Cloud Compute. These models can handle complex tasks such as summarizing documents, generating custom images, or comparing lecture transcripts to notes, with privacy preserved throughout.

Apple Intelligence Core Features Today

The features Apple Intelligence added in 2025 remain its foundation in 2026. Live Translation automatically translates messages and calls. Visual intelligence lets users “search and take action” on content displayed on screen, from identifying objects and products to extracting event details for the calendar.

Other integrated tools include Genmoji and Image Playground, which let users create unique images or emoji combinations from simple prompts. Shortcuts also gains intelligent actions for building rich automation workflows. These actions are powered by language models that run either on device or through Private Cloud Compute.

Voice assistant enhancements, originally slated for an earlier release, are now expected later in 2026. Apple has publicly confirmed that next-generation Siri features will arrive this year as the next step in the evolution of Apple Intelligence. These features are meant to bring deeper context awareness and the ability to handle tasks across apps.

Privacy and On-Device Intelligence

A key pillar of Apple Intelligence is user privacy. Many AI tasks run entirely on device using Apple silicon, which limits how much data leaves the user’s hardware. More complex requests may need larger models. For these, Private Cloud Compute extends the system's capabilities to Apple silicon servers while still protecting user data. That data is processed only to fulfill the request at hand and is not stored afterward.

This hybrid model upholds Apple’s longstanding privacy commitments while unlocking more powerful intelligence across devices, without Apple retaining the personal data its servers process.

Developer Access and AI in Apps

Another strategic direction in 2026 is opening Apple Intelligence models to third-party developers. The Foundation Models framework offers native Swift APIs for calling the on-device foundation model. With it, developers can build intelligent features into their apps, from smart search and text understanding to action suggestions based on context.

Because the model runs on device, this broader adoption lets developers build privacy-aware intelligent experiences that feel native and responsive, extending Apple Intelligence beyond Apple’s own software.
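Developer access centers on Apple's Foundation Models framework, which ships with iOS 26, iPadOS 26, macOS 26, and visionOS 26. The Swift sketch below is an illustration, not Apple sample code. It checks `SystemLanguageModel.default.availability` before opening a `LanguageModelSession`, because the on-device model is unavailable on ineligible hardware or when Apple Intelligence is turned off. Several pieces are hypothetical: the instruction string, the `summarize(_:)` wrapper, and the naive `firstSentence(of:)` fallback. The `#if canImport(FoundationModels)` guard only lets the fallback path compile where the framework is absent.

```swift
import Foundation
#if canImport(FoundationModels)
import FoundationModels
#endif

/// Hypothetical fallback for devices without Apple Intelligence:
/// a naive "summary" that keeps text up to the first ".", "!", or "?".
func firstSentence(of text: String) -> String {
    let trimmed = text.trimmingCharacters(in: .whitespacesAndNewlines)
    guard let end = trimmed.firstIndex(where: { ".!?".contains($0) }) else {
        return trimmed  // no sentence terminator: return everything
    }
    return String(trimmed[...end])
}

/// Hypothetical wrapper: uses the on-device model when it is available,
/// otherwise falls back to `firstSentence(of:)`.
func summarize(_ text: String) async -> String {
    #if canImport(FoundationModels)
    if #available(iOS 26.0, macOS 26.0, visionOS 26.0, *) {
        // The model may be missing (ineligible device, feature turned off,
        // or model assets not yet downloaded), so check before using it.
        if case .available = SystemLanguageModel.default.availability {
            do {
                // Illustrative instructions; not an Apple-provided prompt.
                let session = LanguageModelSession(
                    instructions: "Summarize the user's text in one sentence."
                )
                let response = try await session.respond(to: text)
                return response.content
            } catch {
                // Generation can still fail; use the fallback below.
            }
        }
    }
    #endif
    return firstSentence(of: text)
}
```

An app would call `await summarize(articleText)` from an async context, such as a SwiftUI `.task` modifier. Checking availability before creating a session keeps the fallback path explicit. The `catch` covers generation errors that can still occur on supported devices.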

Forecast Scenarios for Apple Intelligence

Apple Intelligence in 2026 and beyond is poised to deepen its influence across the ecosystem:

Personalized assistance across devices: As advanced voice and context awareness arrive, Apple Intelligence may anticipate user needs based on ongoing patterns, suggesting useful actions before a direct request is made.

Expanded language and regional support: Continued expansion of supported languages and regions would make intelligent features accessible to more users worldwide, building on the multilingual support already available.

Developer-enabled innovation: As developers adopt on-device models, the App Store could see a new wave of apps that leverage AI for productivity, creativity, and personal workflows.

Proactive contextual automation: Tools may emerge that adapt actions to context, reducing repetitive steps and saving time. They might summarize key information from documents or organize tasks around user patterns.

Although some anticipated Siri capabilities are still in development, Apple Intelligence already illustrates how a privacy-centered, deeply integrated AI ecosystem can enrich daily use. Its reach extends from language translation and visual intelligence to fitness insights and automation. As Apple builds on this foundation in 2026, users can expect more powerful, seamless, and personalized intelligent experiences across their devices.