Apple has announced a wide-ranging set of new accessibility features. From Accessibility Nutrition Labels on the App Store to a new Magnifier app for Mac, these tools use Apple silicon and on-device machine learning to make Apple devices easier to use for millions of people. The updates, which also include Braille Access, Accessibility Reader, and improvements to Live Listen and Personal Voice, aim to help users interact with technology more intuitively, whether they are blind or have low vision, are deaf or hard of hearing, or have mobility challenges.

The move underscores Apple’s long-standing commitment to accessibility, a priority emphasized by CEO Tim Cook, who said, “At Apple, accessibility is part of our DNA.” For tech enthusiasts and casual users alike, these features promise to make iPhone, iPad, Mac, and Apple Vision Pro more practical and versatile, reinforcing Apple’s position as a leader in user-focused design.

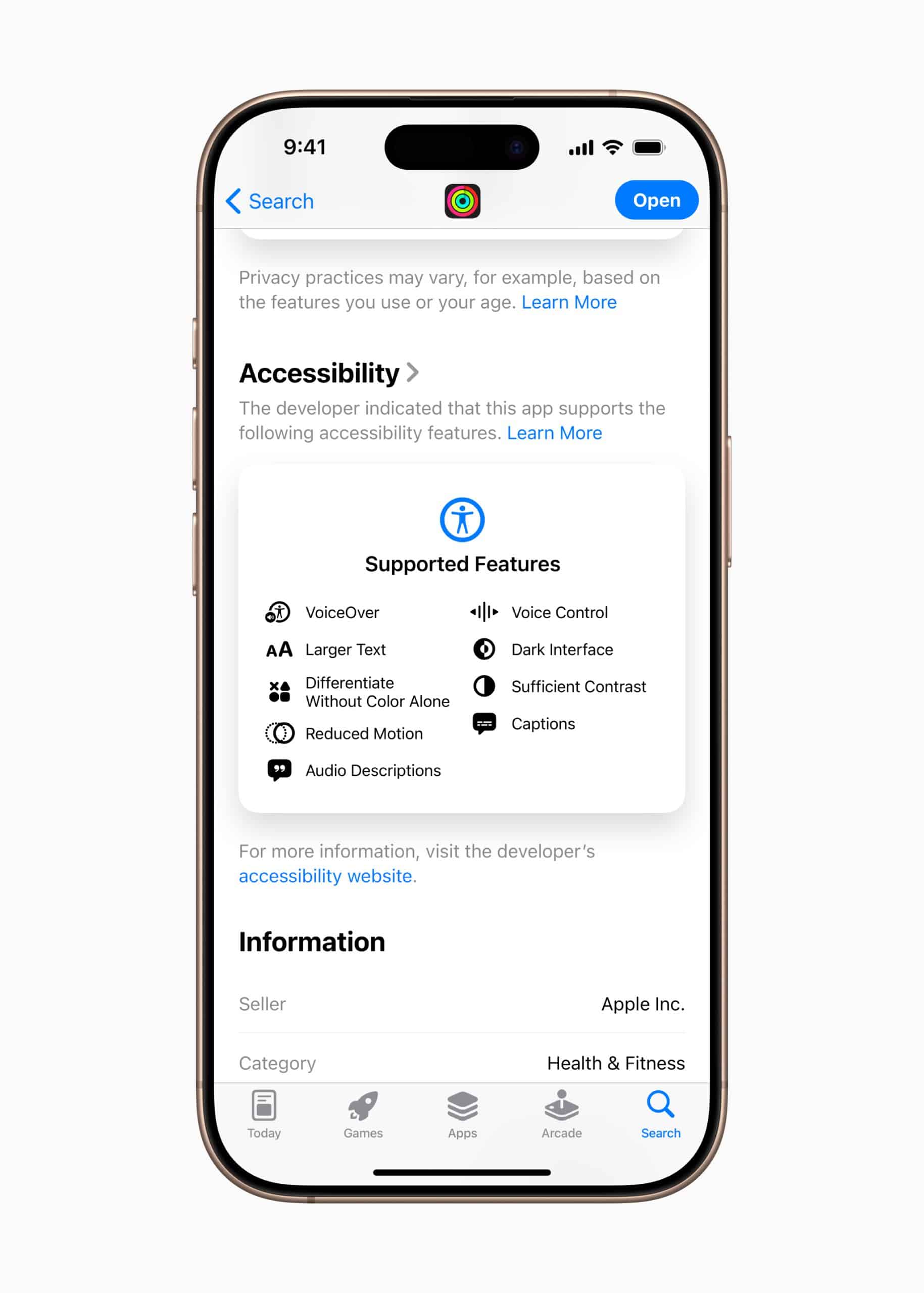

Accessibility Nutrition Labels

One standout feature is Accessibility Nutrition Labels, a new App Store addition that details an app’s accessibility capabilities before download. Labels will highlight support for features like VoiceOver, Larger Text, or Reduced Motion, helping users with disabilities choose apps that meet their needs. Developers can showcase their app’s accessibility credentials, fostering transparency. Eric Bridges, president of the American Foundation for the Blind, called the labels “a huge step forward,” noting they empower users to make informed decisions with confidence, per the press release.

This feature addresses a practical need: ensuring apps are usable from the start. For users who rely on VoiceOver or captions, the labels eliminate guesswork, saving time and frustration. It’s a win for developers too, as clear accessibility info could boost downloads among the growing community of accessibility-conscious consumers.

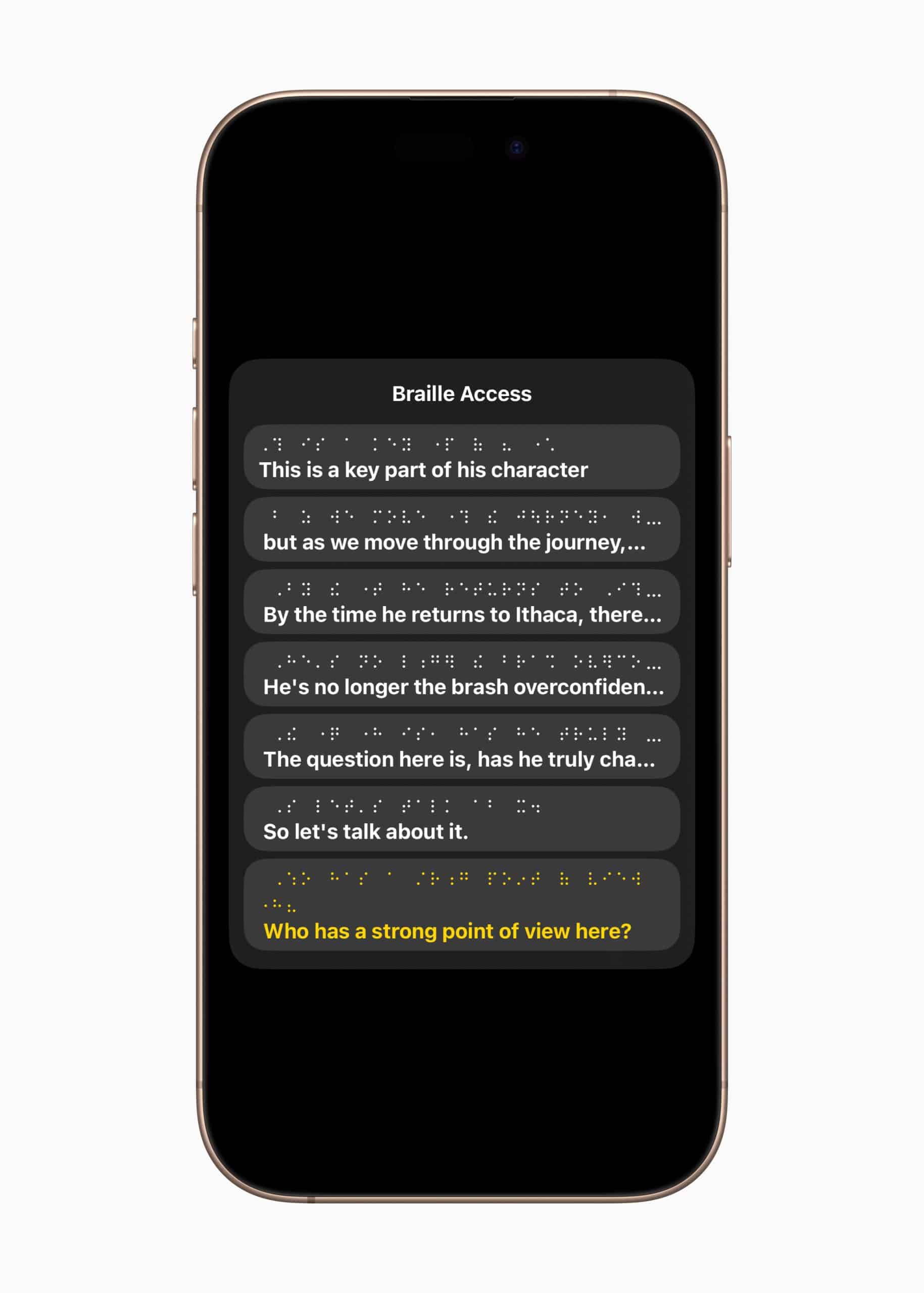

Magnifier for Mac and Braille Access

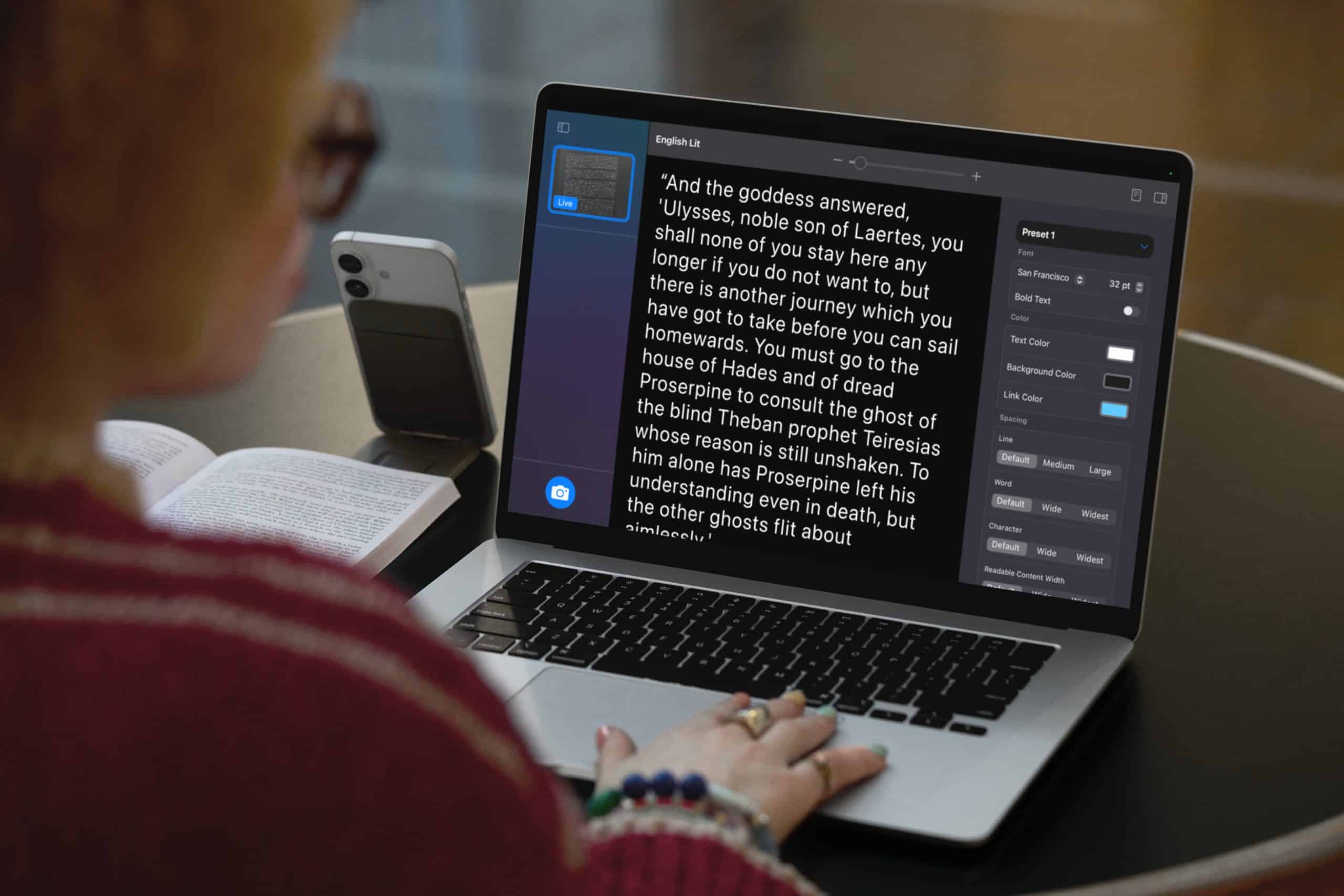

The Magnifier app, already popular on iPhone and iPad, is coming to Mac. It lets users with low vision zoom in on physical objects such as a whiteboard or a book using a connected camera. It works with Continuity Camera on iPhone or with attached USB cameras, and it supports multitasking. For example, a user can follow a presentation through a webcam while reading along in a book using Desk View. Customizable settings like brightness and contrast make text clearer. Integration with the new Accessibility Reader converts text from the physical world into a custom, legible format.

Braille Access turns iPhone, iPad, Mac, and Apple Vision Pro into full-featured braille note takers. Working with connected braille displays, it lets users take notes, perform calculations using Nemeth Braille (a braille code for mathematics and science), and open Braille Ready Format (BRF) files. An integrated form of Live Captions also transcribes conversations in real time directly on a braille display, which is especially valuable for users who are DeafBlind. These tools make Apple devices more practical for students and professionals, offering accessible ways to engage with education and work.

Accessibility Reader and visionOS

Accessibility Reader, a systemwide feature, simplifies reading for users with dyslexia or low vision. Available on iPhone, iPad, Mac, and Apple Vision Pro, it offers customizable fonts, colors, and spacing, and integrates with Spoken Content for audio support. It’s built into Magnifier, allowing users to scan and read text from physical objects like menus or signs, making everyday tasks more manageable.

visionOS on Apple Vision Pro also gets accessibility upgrades. Updates to Zoom let users magnify everything in view, including their surroundings, using the headset’s main camera. Live Recognition uses on-device machine learning to describe surroundings, identify objects, and read documents for VoiceOver users. A new API gives approved apps such as Be My Eyes access to the main camera, so they can provide live, person-to-person visual assistance hands-free. These updates make Apple’s mixed-reality platform more inclusive, broadening its appeal.

Live Listen and Personal Voice

Live Listen turns iPhone into a remote microphone that streams audio to AirPods, Made for iPhone hearing aids, or Beats headphones. It is coming to Apple Watch with real-time Live Captions, so users who are deaf or hard of hearing can read a transcription of what their iPhone hears while listening along. Apple Watch also acts as a remote control. Users can start or stop a session, or jump back to catch something they missed, without getting up in the middle of a meeting or class. Personal Voice, for users at risk of losing their ability to speak, now creates a smoother, more natural-sounding voice in under a minute from just 10 recorded phrases. It also adds support for Spanish (Mexico). These updates make communication more accessible, helping users stay connected.

Why It Matters

Apple’s accessibility push isn’t just about compliance; it’s about making technology work for everyone. By building features like Accessibility Reader and Braille Access into its existing ecosystem, Apple reduces the need for separate, specialized hardware. Running these tools with on-device AI keeps them responsive and keeps personal data on the device, which matters for user trust. For tech enthusiasts, these updates highlight Apple’s engineering; for casual users, they mean easier, more inclusive interactions with devices they already own.

The rollout also signals a broader trend: accessibility as a competitive edge. As companies vie for customer loyalty, Apple’s focus on practical, user-centric features could strengthen its position against rivals. These tools don’t just help users with disabilities; they make Apple’s ecosystem more versatile for everyone.

These features are set to arrive later this year across iOS, iPadOS, macOS, watchOS, and visionOS, though the press release does not confirm a specific release date. Developers can already access guidelines for Accessibility Nutrition Labels, so their apps can be ready. For users, the updates promise a more seamless experience, whether reading a menu, attending a lecture, or exploring mixed reality. As Apple continues to innovate, its accessibility tools set a high bar for what technology can achieve.