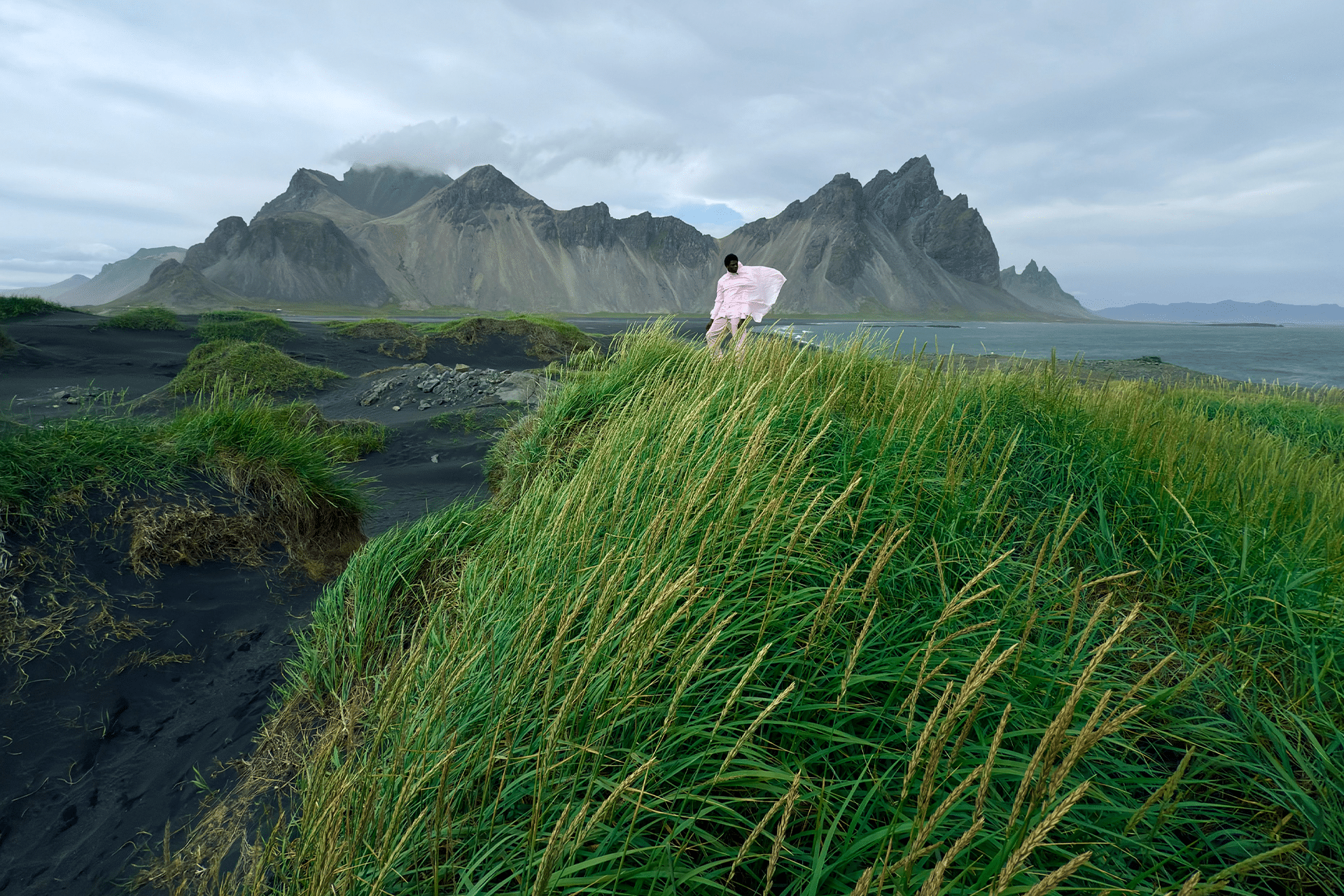

The new sensor, outlined in a patent titled “Image Sensor With Stacked Pixels Having High Dynamic Range And Low Noise,” introduces a sophisticated design that could achieve up to 20 stops of dynamic range. For context, the human eye can perceive roughly 20 to 30 stops, while most smartphone cameras today manage between 10 and 13 stops. This leap could allow iPhones to capture scenes with extreme lighting contrasts—like a person standing in front of a bright window—without losing detail in shadows or highlights.
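A stop is a doubling of light, so stop counts convert to contrast ratios as powers of two. The short Python sketch below does that arithmetic for the figures quoted above. The dB column uses the 20·log10 convention commonly found in image-sensor datasheets; the function names are illustrative only.

```python
import math

def stops_to_contrast_ratio(stops: float) -> float:
    """Each stop doubles the light, so N stops span a 2**N : 1 ratio."""
    return 2.0 ** stops

def stops_to_db(stops: float) -> float:
    """Sensor dynamic range is often quoted in dB as 20 * log10(ratio)."""
    return 20.0 * math.log10(stops_to_contrast_ratio(stops))

if __name__ == "__main__":
    for label, stops in [("smartphone, low end", 10),
                         ("smartphone, high end", 13),
                         ("patented design, claimed", 20)]:
        ratio = stops_to_contrast_ratio(stops)
        # Prints e.g. "patented design, claimed: 20 stops = 1,048,576:1 = 120.4 dB"
        print(f"{label}: {stops} stops = {ratio:,.0f}:1 = {stops_to_db(stops):.1f} dB")
```

The gap between 13 and 20 stops is 7 stops, which works out to 2^7 = 128 times more contrast between the brightest and darkest details a single exposure could hold.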

A key feature of the sensor is the Lateral Overflow Integration Capacitor (LOFIC) system. When a pixel's photodiode fills up in bright light, the excess charge spills over into a capacitor instead of being lost, so each pixel can store a different amount of light depending on the scene's brightness and keep both bright and dark areas properly exposed. Additionally, the sensor incorporates on-chip noise reduction: each pixel includes a memory circuit that cancels electronic noise in real time. The result is cleaner, sharper images, even in low light, before any software processing is applied.
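To make the overflow idea concrete, here is a deliberately simplified Python model of a single LOFIC pixel. It assumes charge fills the photodiode first and any excess spills laterally into the capacitor until that also fills. The class name `ToyLoficPixel` and every capacity and noise figure are hypothetical, not values from Apple's patent. Dynamic range is approximated as the total storable charge divided by the read-noise floor, expressed in stops (log base 2).

```python
import math
from dataclasses import dataclass

@dataclass
class ToyLoficPixel:
    # Hypothetical figures, chosen only for illustration.
    photodiode_full_well_e: float = 6_000.0    # electrons the photodiode holds before saturating
    overflow_capacity_e: float = 1_500_000.0   # extra electrons the lateral capacitor can absorb
    read_noise_e: float = 1.5                  # noise floor, in electrons

    def integrate(self, electrons: float) -> tuple[float, float]:
        """Split collected charge between the photodiode and the overflow capacitor."""
        in_pd = min(electrons, self.photodiode_full_well_e)
        overflow = max(0.0, electrons - self.photodiode_full_well_e)
        in_cap = min(overflow, self.overflow_capacity_e)  # charge beyond this is clipped
        return in_pd, in_cap

    def dynamic_range_stops(self, with_overflow: bool) -> float:
        """Largest storable signal over the noise floor, in stops."""
        max_signal = self.photodiode_full_well_e
        if with_overflow:
            max_signal += self.overflow_capacity_e
        return math.log2(max_signal / self.read_noise_e)

if __name__ == "__main__":
    pixel = ToyLoficPixel()
    print(pixel.integrate(2_000))    # dim pixel: all charge stays in the photodiode
    print(pixel.integrate(500_000))  # bright pixel: the excess spills into the capacitor
    print(round(pixel.dynamic_range_stops(False), 1))  # 12.0
    print(round(pixel.dynamic_range_stops(True), 1))   # 19.9
```

With these made-up numbers, the capacitor stretches the same pixel from about 12 to about 20 stops. A real sensor must also handle the extra noise of reading out the capacitor, which this toy model ignores.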

Why This Matters for Users

For iPhone users, this development could mean photos and videos that more closely match what they see with their own eyes. Imagine capturing a sunset where the vibrant hues of the sky and the subtle details of the landscape are both preserved. The sensor could also improve augmented reality (AR) applications, where precise light and depth detection are critical for blending digital and real-world elements seamlessly. By prioritizing dynamic range and noise reduction, the design could bring professional-grade results to casual users in everyday photography.

Apple’s Strategic Shift

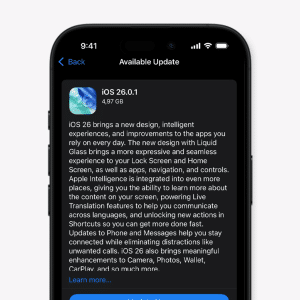

Developing an in-house image sensor marks a significant shift for Apple, which has historically relied on Sony for its camera sensors. This move aligns with the company’s broader strategy of vertical integration, as seen with its custom silicon like the A-series and M-series chips. By controlling the sensor design, Apple can optimize hardware and software integration, potentially improving power efficiency and computational photography features like Night Mode and Deep Fusion. This could also reduce costs over time, though the primary focus appears to be on performance rather than immediate cost savings.

Challenges and Timeline

Creating a custom image sensor is no small feat. The technology requires overcoming complex engineering hurdles, such as stacking silicon layers without compromising performance or increasing sensor size. While the patent, filed in July 2025, indicates active research, it’s not a guarantee of immediate implementation. Industry observers suggest that Apple’s sensor could debut in iPhones as early as 2026, potentially in the iPhone 18 series, though no official timeline has been confirmed. The company’s acquisition of InVisage Technologies in 2017, which specialized in advanced sensor materials, hints that this project may have been in development for years.

A Competitive Edge

Apple’s sensor could position the iPhone as a leader in mobile photography, ahead of competitors such as Samsung and Google. Google’s Pixel series, for instance, has been praised for computational photography but relies on third-party sensors. The proposed sensor’s dynamic range could even rival professional cinema cameras such as the ARRI ALEXA 35, a benchmark in filmmaking. This advancement would appeal to photographers and could also strengthen Apple’s ecosystem by improving camera features on devices like the iPad and Vision Pro.

Looking Ahead

While Apple’s plans are still in the research phase, the potential impact is clear: a camera sensor that captures the world with near-human fidelity could redefine smartphone photography. If the technology reaches production, future iPhones could push creative boundaries and bring every snapshot a step closer to what the eye sees. The patent underscores Apple’s effort to pair cutting-edge hardware with practical benefits and keep its devices at the forefront of the industry.