Anthropic’s new Claude Opus 4.5 advances its flagship family with capabilities designed for long-form reasoning, coding productivity and agentic workflows, addressing demand from developers and enterprises that want both performance and cost-effectiveness. The model is now available via Anthropic’s API and supported cloud platforms, with pricing adjusted downward to open higher-tier capabilities to more users. The move reflects an intensifying race in large language model development, as companies push not only new features but also tighter integration with workflows such as spreadsheets, slide generation and autonomous agents.

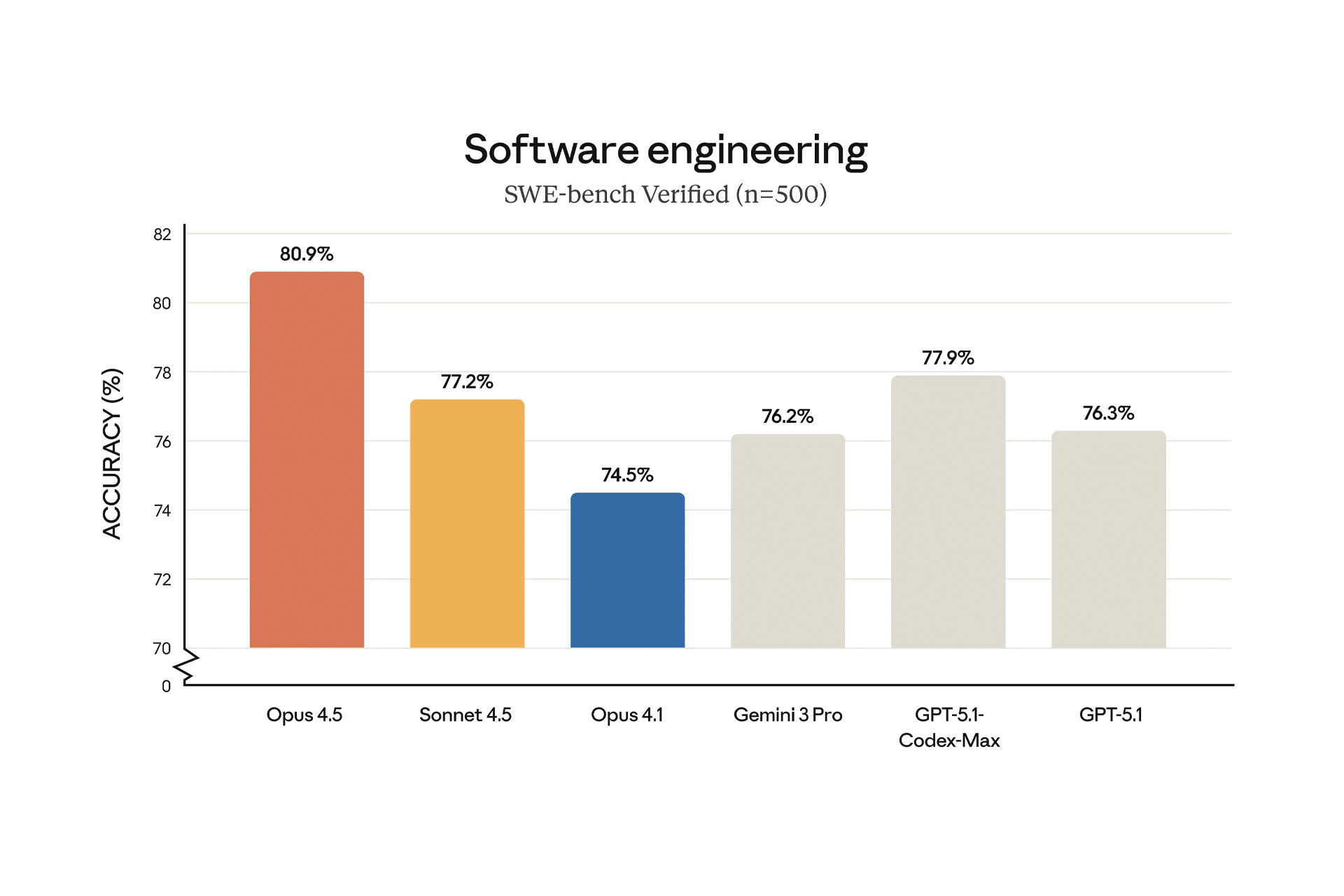

One of the headline improvements in Opus 4.5 is its performance on coding and multi-step reasoning tasks. Anthropic’s internal benchmarks report that the model surpasses the previous generation while using fewer tokens to reach solutions, an important measure for enterprises whose token spend feeds directly into operating costs. The model is explicitly tuned for long-horizon reasoning, tool integration and autonomous agent workflows, reflecting a shift in large language model design from reactive chat toward active task orchestration. Early customers say the model handled multi-file codebases, refactoring and multi-agent coordination with higher pass rates than older versions.

Expanded Functionality for Office and Agent Use

Beyond coding, Opus 4.5 is positioned to support workflows in spreadsheets and slide decks, along with agents that persist across documents and sessions. The model is tuned not just to answer prompts but to manage multi-step projects, such as summarizing large datasets, generating multi-slide presentations and carrying out agentic tasks across tools. For enterprises managing complex document workflows, these enhancements may shift AI from a single-prompt assistant toward a continuous collaborator.

Anthropic also broadened platform availability, making Opus 4.5 accessible on major cloud services and via its API. Developers using the Claude platform can integrate the model into Excel, Chrome extensions or open-ended agents. The company highlights improved efficiency in token usage and cost structures, citing up to 50 percent reductions in token consumption for equivalent tasks compared to prior models. The pricing change reflects an attempt to accelerate adoption among teams requiring both high performance and cost discipline.
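To make the cost argument concrete, the short Python sketch below works through the arithmetic of a token reduction. Only the 50 percent reduction comes from the company's stated upper bound ("up to"); the task volume, tokens per task and per-token price are hypothetical placeholders rather than Anthropic's actual figures, and the function names are illustrative only.

```python
def monthly_token_cost(tasks_per_month, tokens_per_task, price_per_million_tokens):
    """Return the monthly spend for a workload, in the same currency as the price."""
    total_tokens = tasks_per_month * tokens_per_task
    return total_tokens * price_per_million_tokens / 1_000_000


def cost_after_reduction(baseline_cost, token_reduction, price_ratio=1.0):
    """Scale a baseline cost by a fractional token reduction and an optional price change.

    token_reduction: fraction of tokens saved (0.5 means 50 percent fewer tokens).
    price_ratio: new price divided by old price (1.0 means unchanged pricing).
    """
    return baseline_cost * (1 - token_reduction) * price_ratio


# Hypothetical workload: 100,000 tasks a month, 20,000 tokens each,
# at an assumed blended price of 10.0 per million tokens.
baseline = monthly_token_cost(100_000, 20_000, 10.0)

# Best case cited by the company: up to 50 percent fewer tokens per task.
reduced = cost_after_reduction(baseline, 0.5)

# Same token saving combined with an assumed halving of the per-token price.
reduced_and_cheaper = cost_after_reduction(baseline, 0.5, price_ratio=0.5)

print(baseline, reduced, reduced_and_cheaper)
```

Under these assumed inputs, token savings and price cuts multiply rather than add, which is why the two are worth tracking separately when estimating spend.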

Strategic Implications in the LLM Market

The release of Claude Opus 4.5 signals Anthropic’s attempt to regain or assert leadership in high-end LLM use cases at a time when competitors are releasing new models rapidly. By delivering higher performance and lower cost simultaneously, the company aims to differentiate its offering for enterprise customers who care about efficiency, governance and long-context workflow support. The model’s focus on agents and coding also aligns with demand trends in software engineering and enterprise automation, rather than purely conversational chatbot use.

At the same time, the release raises questions about how enterprises will value upgrades to models that deliver incremental gains. While coding benchmark improvements are tangible, the real-world impact will depend on how organizations integrate the new model into production pipelines and toolchains. Token cost reductions matter at large scale, but developer time, integration effort and workflow changes continue to weigh on ROI calculations. Nevertheless, the launch positions Anthropic as a contender in a space where cost-performance trade-offs are increasingly visible.