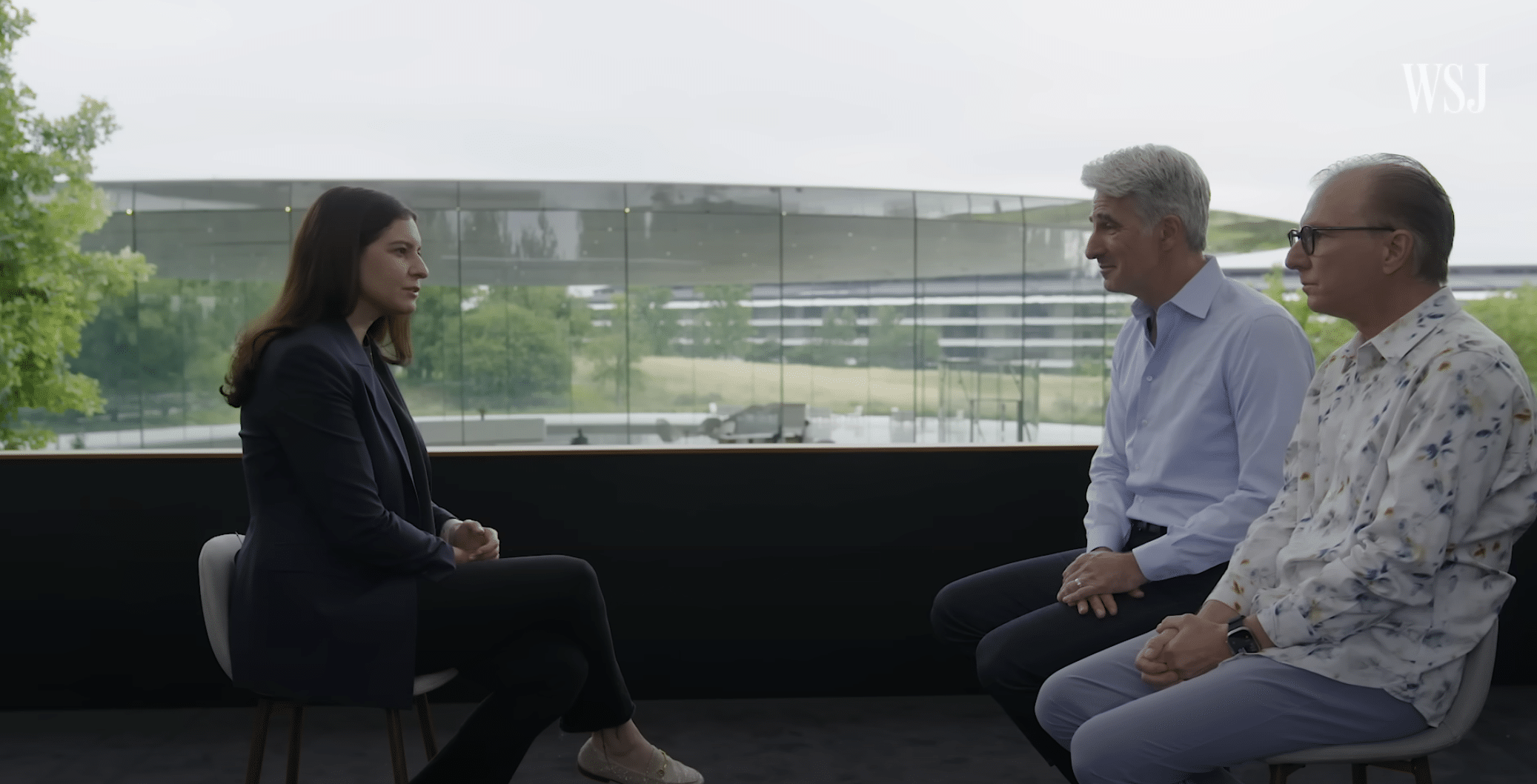

In a candid Wall Street Journal interview, Apple’s SVP of Software Engineering Craig Federighi and SVP of Worldwide Marketing Greg Joswiak pushed back on the claim that Apple is behind in AI, arguing that Apple isn’t chasing the same finish line as competitors like OpenAI. Instead, Apple Intelligence focuses on seamless, on-device integration that enhances everyday tasks rather than on a standalone chatbot. Here’s how Apple is carving its own path in AI and what it means for users.

A Different Kind of AI

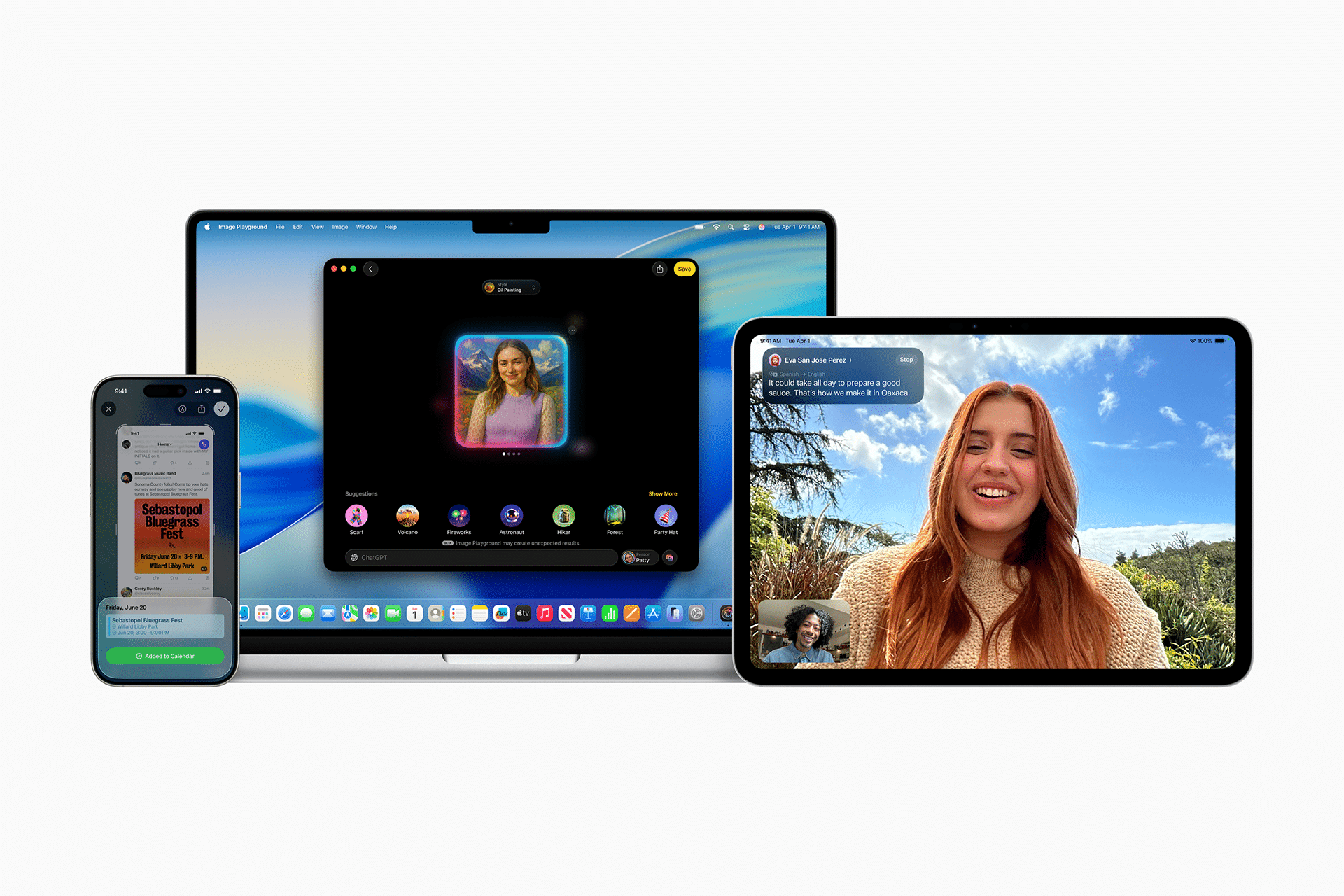

Apple Intelligence isn’t a flashy app or a rival to ChatGPT—it’s a framework woven into iOS 26, iPadOS 26, and beyond. Federighi emphasized that Apple prioritizes practical, privacy-focused features over building a “destination” AI. For example, Visual Intelligence analyzes your iPhone screen to suggest actions, like adding a calendar event from a screenshot, while live translation in Messages and FaceTime bridges language gaps on-device. Joswiak noted, per the Wall Street Journal, that users often leverage Apple Intelligence without realizing it, as it powers subtle enhancements across apps.

Unlike competitors’ cloud-heavy models, Apple’s AI runs primarily on-device, on A17 Pro and M1 chips and later, which helps keep user data private. This approach, confirmed by Apple’s WWDC keynote, contrasts with power-intensive models like those driving ChatGPT. Joswiak dismissed the idea of Apple needing its own chatbot, pointing to Apple’s integration of ChatGPT for users who want it, while keeping core features native.

Addressing the “Behind” Narrative

Critics, including some tech analysts, argue Apple trails in generative AI, citing Image Playground’s lackluster outputs or Siri’s occasional stumbles with complex queries. Federighi countered that Apple doesn’t try to compete in every tech arena (it has no answer to Amazon’s e-commerce or YouTube’s video platform, for example), so expecting a direct ChatGPT rival misses the point. Joswiak sharply rebuked speculation that Apple’s contextual Siri was “demo ware,” and Bloomberg reported that the feature, powered by app intents, is already functional.

Apple’s strategy hinges on long-term refinement. While competitors race to scale large language models, often at environmental cost, Apple focuses on efficient, user-centric tools. Developers can tap Apple’s Foundation Models framework this fall, enabling on-device AI without siphoning user data to third parties, unlike some cloud-based solutions.

What’s Next for Apple Intelligence

Siri’s evolution is a key focus. Rumors of a large language model backend persist, but Apple’s current app-intent system already enables contextual responses, like pulling data from apps for smarter replies. The Wall Street Journal noted that Federighi showcased a working version at WWDC, suggesting Siri’s upgrades are further along than the public perceives. Rather than shipping a standalone AI app, Apple aims to make intelligence a proactive, personalized layer that runs behind the scenes across its devices.

For users, this means practical benefits: Visual Intelligence streamlines tasks, like searching for items in a screenshot via Google or Etsy, while on-device processing keeps data secure. The Games app, Photos enhancements, and iPadOS multitasking all leverage Apple Intelligence for subtle but impactful improvements. As developers adopt the framework, expect richer app integrations by fall 2025.

Apple’s AI race isn’t about raw power—it’s about relevance. By embedding intelligence into daily workflows, Apple delivers tools that feel intuitive, not intrusive. The privacy-first, on-device approach sets it apart from cloud-dependent competitors, appealing to users wary of data leaks. While Image Playground may underwhelm now, Apple’s history of refining features suggests it’s playing the long game. For tech enthusiasts and casual users alike, Apple Intelligence promises a more seamless iPhone and iPad experience without chasing the chatbot hype.