The idea of data centers in orbit has moved from speculative fiction into serious discussion among aerospace firms, cloud providers, and artificial intelligence researchers. As AI workloads continue to grow and terrestrial data center expansion runs into energy, land, and regulatory constraints, space-based computing is increasingly framed as a potential way to bypass Earth-bound limitations. Proposals often reference the late 2020s as an inflection point, but behind the optimism sits a more complex reality shaped by physics, economics, and infrastructure limits.

Rather than positioning orbital data centers as replacements for terrestrial infrastructure, most current discussions describe them as experimental extensions designed for narrow use cases. The distinction matters, because the challenges of operating compute infrastructure in space are fundamentally different from scaling data centers on Earth.

Why Data Centers in Orbit Entered the Conversation

The renewed interest in data centers in orbit reflects mounting pressure on the global computing ecosystem. AI training and inference require dense clusters of accelerators, driving unprecedented demand for electricity, cooling, and physical space. In several regions, power grid capacity and environmental permitting have slowed or blocked new data center construction, creating bottlenecks for cloud expansion.

Space offers a theoretical escape from some of these constraints. In suitable orbits, such as dawn-dusk sun-synchronous orbits, solar panels receive near-continuous sunlight, promising steady power generation without weather or day-night cycles; most low Earth orbits, however, pass through Earth's shadow on every revolution. Proponents also point to the absence of an atmosphere, suggesting cooling approaches that avoid the water-intensive systems used on Earth.
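To give a sense of scale, the sketch below estimates the solar array area needed to supply a given electrical load. It assumes the solar constant of roughly 1361 W/m² near Earth, a hypothetical end-to-end cell efficiency of 30%, and a simplified sunlit-fraction term for orbits that pass through Earth's shadow. It ignores battery losses, panel degradation, and pointing losses, so treat it as an order-of-magnitude estimate rather than a design figure.

```python
# Rough solar array sizing for an orbital compute platform (illustrative only).
SOLAR_CONSTANT_W_PER_M2 = 1361.0  # approximate total solar irradiance near Earth


def array_area_m2(load_w: float, cell_efficiency: float = 0.30,
                  sunlit_fraction: float = 1.0) -> float:
    """Return the panel area needed to supply `load_w` watts on average.

    Assumptions (hypothetical defaults): panels face the Sun directly,
    `cell_efficiency` is the end-to-end conversion efficiency, and energy for
    eclipse periods is collected during the sunlit part of each orbit
    (sunlit_fraction < 1) with no storage losses.
    """
    if load_w < 0:
        raise ValueError("load_w must be non-negative")
    if not 0 < cell_efficiency <= 1 or not 0 < sunlit_fraction <= 1:
        raise ValueError("efficiency and sunlit_fraction must be in (0, 1]")
    # Electrical power produced per square metre while in sunlight.
    w_per_m2 = SOLAR_CONSTANT_W_PER_M2 * cell_efficiency
    # Scale up so that sunlit-only generation covers the orbit-average load.
    return load_w / (w_per_m2 * sunlit_fraction)


if __name__ == "__main__":
    # Hypothetical 1 MW load: continuous sunlight vs. an orbit sunlit 60% of the time.
    print(f"{array_area_m2(1e6):.0f} m^2")
    print(f"{array_area_m2(1e6, sunlit_fraction=0.6):.0f} m^2")
```

Under these assumptions, a 1 MW load needs roughly 2,400 m² of panels in continuous sunlight and roughly 4,100 m² in an orbit that is sunlit 60% of the time, before any of the real-world losses listed above.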

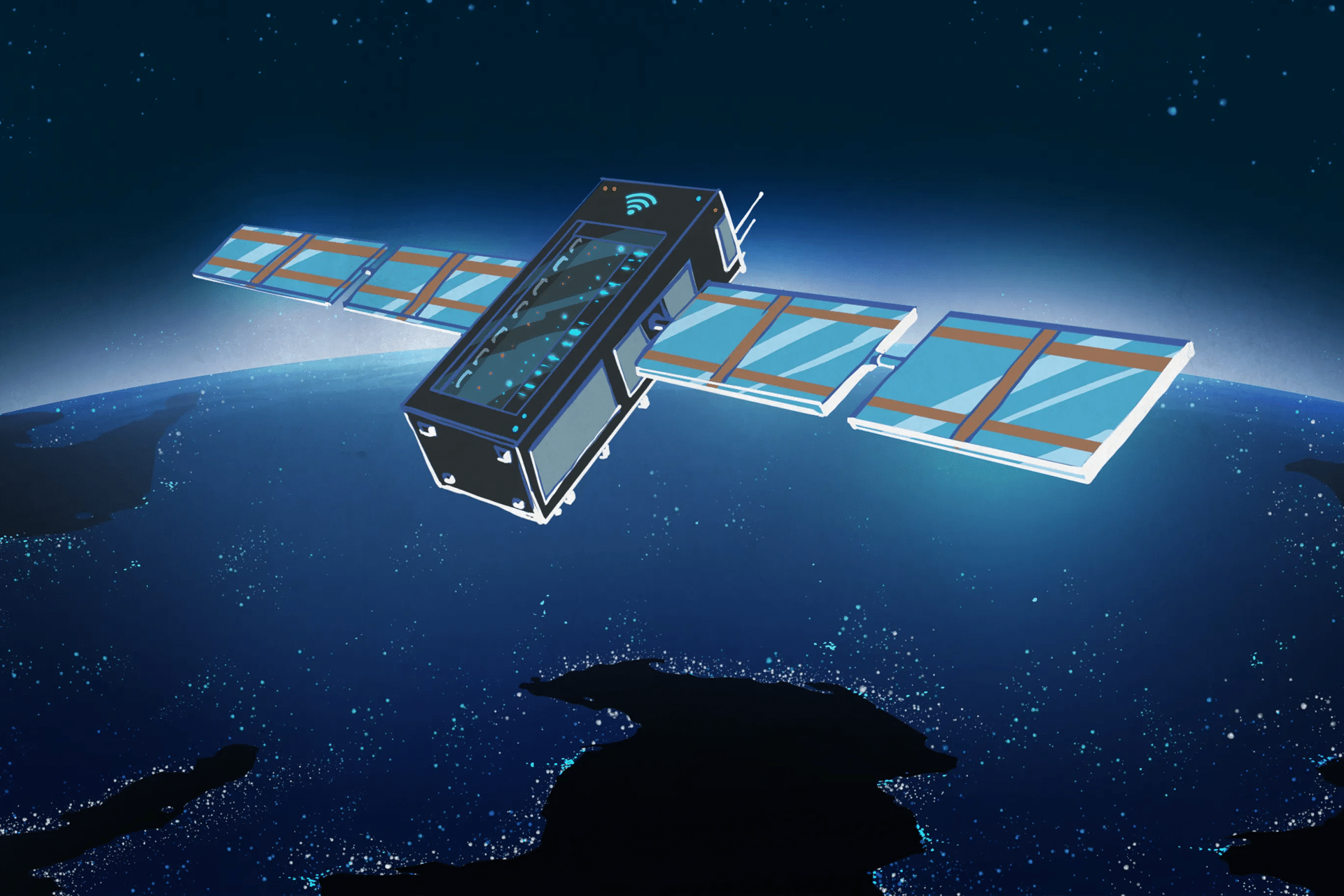

Another factor is proximity to space-based assets. Satellites, Earth observation systems, and scientific instruments generate large volumes of data. Processing information in orbit rather than transmitting everything back to Earth could reduce bandwidth demands and latency for specific applications. For these scenarios, orbital computing is framed as an efficiency improvement rather than a capacity expansion.

These arguments gained momentum alongside declining launch costs. Reusable rockets and higher launch cadence have lowered the barrier to placing experimental hardware in orbit, making test deployments more feasible than in previous decades.

The Reality of Engineering Constraints

Despite the conceptual appeal, data centers in orbit face engineering challenges that offset many proposed advantages. Hardware designed for terrestrial environments is not suited to radiation exposure, extreme temperature variation, or long-term operation without physical maintenance. Space-qualified components are more expensive, less modular, and often trail cutting-edge commercial silicon in performance and efficiency.

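Cooling remains a central issue. Space eliminates air and water cooling, but the heat still has to go somewhere: in a vacuum there is no convection, so waste heat can only be rejected to the environment by radiation. Because radiated power scales with radiator area and with the fourth power of radiator temperature, radiative cooling requires large surface areas, adding mass and structural complexity. Managing heat from high-density AI accelerators in orbit would demand designs that have not yet been demonstrated at scale.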
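The area problem follows directly from the Stefan-Boltzmann law, where net radiated flux per unit area is ε·σ·(T⁴ − T_sink⁴). The sketch below inverts that relation to estimate radiator area for a given heat load. The emissivity of 0.9, the 3 K deep-space sink, and the 1 MW heat load in the example are hypothetical values, and the model ignores absorbed sunlight and Earth infrared, both of which would make the required area larger.

```python
# Radiator sizing from the Stefan-Boltzmann law (illustrative only).
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_w: float, radiator_temp_k: float,
                     emissivity: float = 0.9, sink_temp_k: float = 3.0,
                     sides: int = 1) -> float:
    """Area needed to radiate `heat_w` watts to deep space.

    Simplifications: ignores absorbed sunlight and Earth infrared, treats the
    radiator as isothermal, and uses a hypothetical coating emissivity.
    `sides=2` models a panel that radiates from both faces.
    """
    if radiator_temp_k <= sink_temp_k:
        raise ValueError("radiator must be hotter than the sink")
    if sides not in (1, 2):
        raise ValueError("sides must be 1 or 2")
    # Net flux per face: epsilon * sigma * (T^4 - T_sink^4)
    flux_w_per_m2 = emissivity * STEFAN_BOLTZMANN * (
        radiator_temp_k**4 - sink_temp_k**4)
    return heat_w / (flux_w_per_m2 * sides)


if __name__ == "__main__":
    # Hypothetical 1 MW of accelerator waste heat with the radiator at 300 K.
    for sides in (1, 2):
        print(sides, round(radiator_area_m2(1e6, 300.0, sides=sides)))
```

With these assumptions, 1 MW at 300 K needs roughly 2,400 m² of single-sided radiator, or about half that if both faces radiate. The T⁴ term also explains a design tension: electronics prefer to run cool, but dropping the radiator to 250 K roughly doubles the required area.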

Maintenance and reliability further complicate deployment. Terrestrial data centers rely on frequent hardware replacement and incremental upgrades to maintain uptime. In orbit, component failure can mean permanent loss unless systems are designed for autonomous repair or modular replacement. Servicing missions increase cost and depend on advances in on-orbit robotics and assembly that are still emerging.

Radiation shielding adds another layer of complexity. Protecting sensitive electronics increases mass, directly affecting launch costs and limiting the amount of compute hardware that can be economically deployed.

Economic and Network Limitations

The economics of data centers in orbit remain uncertain. Even with reduced launch costs, placing large volumes of compute hardware into space is significantly more expensive than building on Earth. Power generation systems, thermal management, radiation protection, and communication links all add to the cost structure.

Bandwidth constraints also limit viable workloads. AI systems often require moving massive datasets between storage and compute nodes. While satellite communications continue to improve, transmitting large volumes of data between Earth and orbit remains costly and introduces latency. This restricts space-based computing to workloads where data originates in space or where limited data transfer is acceptable.
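The scale of the bandwidth problem is easy to estimate. The sketch below computes how long a dataset takes to cross an Earth-orbit link. The link rate, the fraction of time a ground station is in view, and the onboard reduction factor (modelling in-orbit processing that shrinks data before downlink) are all hypothetical parameters, not figures for any real system, and protocol overhead and retransmissions are ignored.

```python
# Back-of-the-envelope Earth-orbit transfer time (illustrative only).
def transfer_time_hours(data_bytes: float, link_bps: float,
                        contact_fraction: float = 1.0,
                        reduction_factor: float = 1.0) -> float:
    """Hours needed to move `data_bytes` over a link of `link_bps` bits/s.

    `contact_fraction` is the hypothetical share of time a ground station is
    in view; `reduction_factor` models onboard processing that shrinks the
    data before transmission (10 means only a tenth is sent).
    """
    if link_bps <= 0:
        raise ValueError("link_bps must be positive")
    if not 0 < contact_fraction <= 1 or reduction_factor < 1:
        raise ValueError("invalid contact_fraction or reduction_factor")
    bits = data_bytes * 8 / reduction_factor
    # Only the time in contact with a ground station carries data.
    seconds = bits / (link_bps * contact_fraction)
    return seconds / 3600


if __name__ == "__main__":
    petabyte = 1e15
    # Hypothetical 10 Gbit/s link.
    print(f"{transfer_time_hours(petabyte, 10e9):.1f} h, always in contact")
    print(f"{transfer_time_hours(petabyte, 10e9, contact_fraction=0.1):.1f} h, 10% contact")
    print(f"{transfer_time_hours(petabyte, 10e9, 0.1, reduction_factor=100):.1f} h, "
          "10% contact, data reduced 100x onboard")
```

Even an uninterrupted 10 Gbit/s link would need a little over nine days to move one petabyte, and limited contact windows stretch that further. Reducing the data in orbit before downlink is what makes space-originated workloads the more plausible fit.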

As a result, most realistic use cases involve specialized processing rather than general-purpose cloud computing. These include onboard analysis for satellites, real-time monitoring for scientific instruments, and certain defense or communications tasks where immediate local processing outweighs infrastructure costs.

What the Late-2020s Timeline Really Means

References to the late 2020s as a milestone for data centers in orbit should be interpreted as checkpoints for experimentation rather than deadlines for deployment. Publicly discussed timelines often reflect conceptual targets, not confirmed launch schedules for large-scale facilities.

More plausible within that timeframe are small, purpose-built compute platforms integrated into satellite systems. These would function as distributed nodes rather than centralized data centers, supporting limited AI inference or data processing close to the source.

Such platforms would not resemble terrestrial hyperscale facilities. Instead, they would complement Earth-based infrastructure, reducing data transmission requirements and enabling faster decision-making for specific applications.

Most AI training and large-scale inference will continue to take place on Earth, where economies of scale, mature supply chains, and established networks remain decisive advantages.

Longer-Term Implications

While large orbital data centers remain speculative, the discussion highlights a broader convergence between space infrastructure and digital computing. Satellites are becoming more autonomous, with onboard processing enabling real-time analysis and reduced dependence on ground stations. This mirrors trends in edge computing across terrestrial networks.

Future advances in launch systems, in-orbit manufacturing, and autonomous maintenance could gradually reduce today’s barriers. Even then, space-based computing is likely to remain focused on applications where proximity to orbital assets offers clear benefits.

Environmental and regulatory considerations further shape the debate. Moving compute infrastructure into orbit shifts concerns rather than eliminating them, raising questions about orbital debris, launch emissions, and long-term sustainability. Governance issues around data sovereignty, security, and international coordination add further complexity.

The interest in data centers in orbit ultimately reflects the scale of demand generated by modern AI rather than a near-term solution to it. Space is being explored because terrestrial systems are under strain, not because orbital computing is inherently simpler or cheaper. For now, space-based data centers remain experimental, specialized, and constrained by realities that extend well beyond optimistic timelines.