For years, Siri has been present everywhere in the Apple ecosystem, yet rarely felt central. It could answer questions, set timers, and control devices, but conversations felt fragmented and short-lived. Each request stood alone, disconnected from what came before.

The announced integration of Google’s Gemini models into Apple’s AI foundation suggests that this limitation may finally be addressed. What’s emerging is not just a smarter Siri, but a more coherent voice for the entire Apple ecosystem.

From Commands to Conversations

The most meaningful change expected from Gemini Siri is continuity. Instead of treating each request as a reset, Siri is positioned to remember context within a conversation.

Ask about a meeting, follow up with a scheduling change, then request notes from that discussion, and the assistant is expected to follow the thread rather than just react to trigger words. That shift would let interactions feel closer to human dialogue, where meaning accumulates rather than disappears.

A more conversational tone also matters. Responses are expected to be quicker, more direct, and less robotic, reducing the friction that once discouraged longer exchanges.

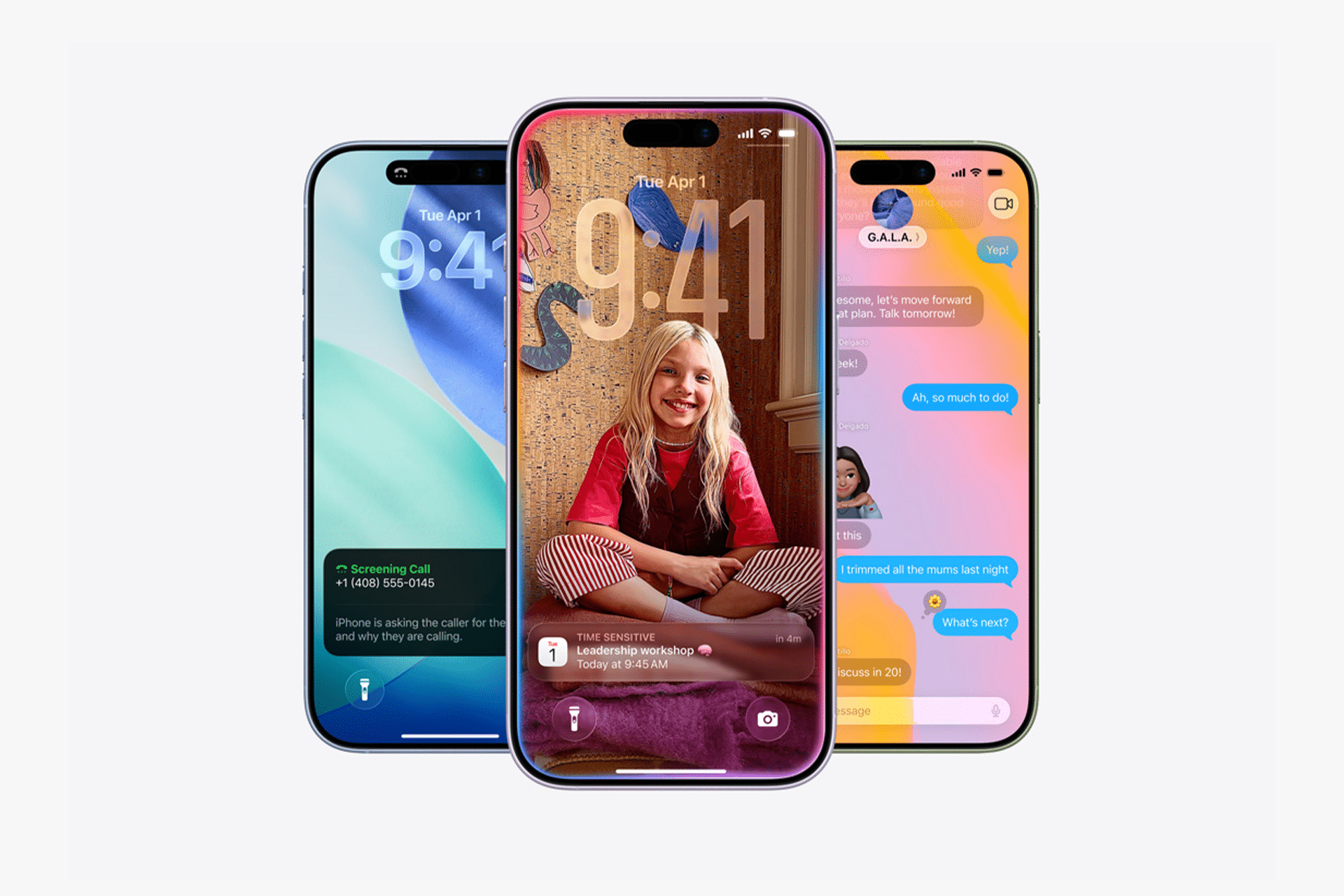

Context That Spans Devices

Apple’s strength has always been ecosystem integration, and Gemini Siri appears designed to express that integration through voice.

A reminder set on iPhone could connect naturally to Calendar on Mac. A note dictated on Apple Watch could resurface when you pick up work on iPad. Home requests could link lights, temperature, and media without repeated clarification.

Siri becomes less of a feature and more of a connective layer, aware of what you’re doing, where you are, and what you’ve already said, while still respecting Apple’s privacy boundaries.

Memory as a Feature, Not a Risk

One of the most anticipated aspects of Gemini Siri is short-term conversational memory. The ability to keep track of stated preferences, ongoing tasks, and recent context would turn Siri into a genuine assistant rather than a reactive tool.

That memory is expected to be selective and intentional. Apple’s approach emphasizes on-device processing and Private Cloud Compute, with the aim of keeping personal context under user control rather than letting it become a persistent profile stored elsewhere.

This balance between memory and privacy defines how far Siri can evolve without losing trust.

A Voice That Matches Daily Life

Siri’s role has expanded beyond utilities. It touches work, home, and personal organization. With Gemini-level language understanding, Siri can bridge these areas more fluidly.

Managing schedules, drafting notes, summarizing messages, preparing reminders, and coordinating household routines become part of a single conversational flow. The assistant doesn’t feel like it switches modes. It adapts naturally as the day unfolds.

This is where Siri can finally express the soul of the Apple ecosystem, not through features, but through presence.

Why This Moment Matters

Apple has invested heavily in intelligence, but often quietly. The Gemini Siri integration represents a visible turning point, where that investment becomes tangible in everyday interactions.

Rather than competing on novelty, Apple appears focused on reliability, tone, and usefulness. Siri doesn’t need to be louder or more expressive. It needs to be more aware, more consistent, and more human in how it supports tasks.

If executed well, Gemini Siri could change how users relate to their devices, turning voice from a convenience into a primary interface.

A Natural Interface at Last

The promise of voice assistants has always been natural interaction. With Gemini powering deeper understanding and Apple providing ecosystem-level integration, that promise feels closer than ever.

Siri may finally move beyond being a tool you talk to and become a presence that listens, remembers, and helps without breaking flow. If it does, Apple’s ecosystem gains not just intelligence, but a voice that feels like it belongs everywhere it speaks.