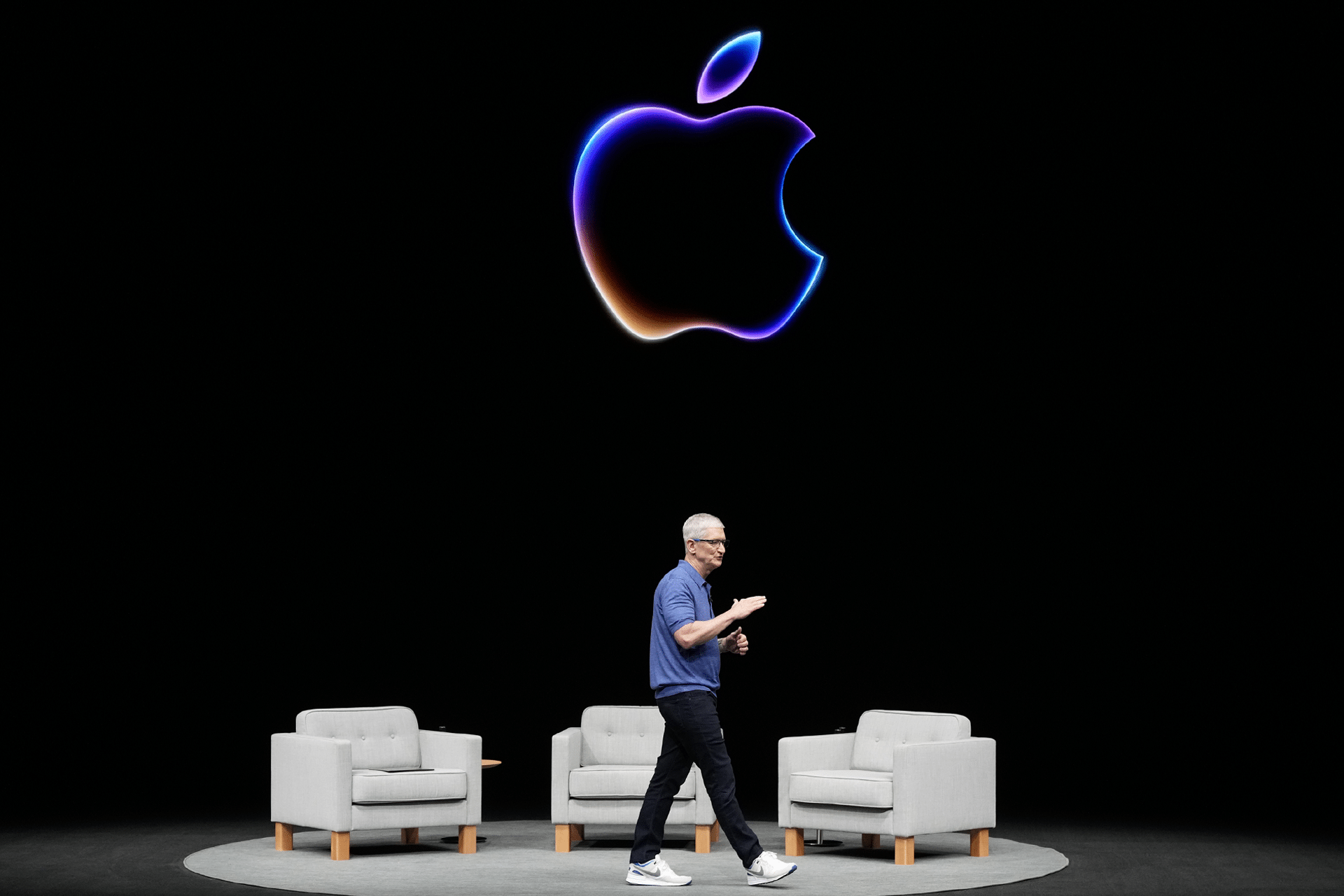

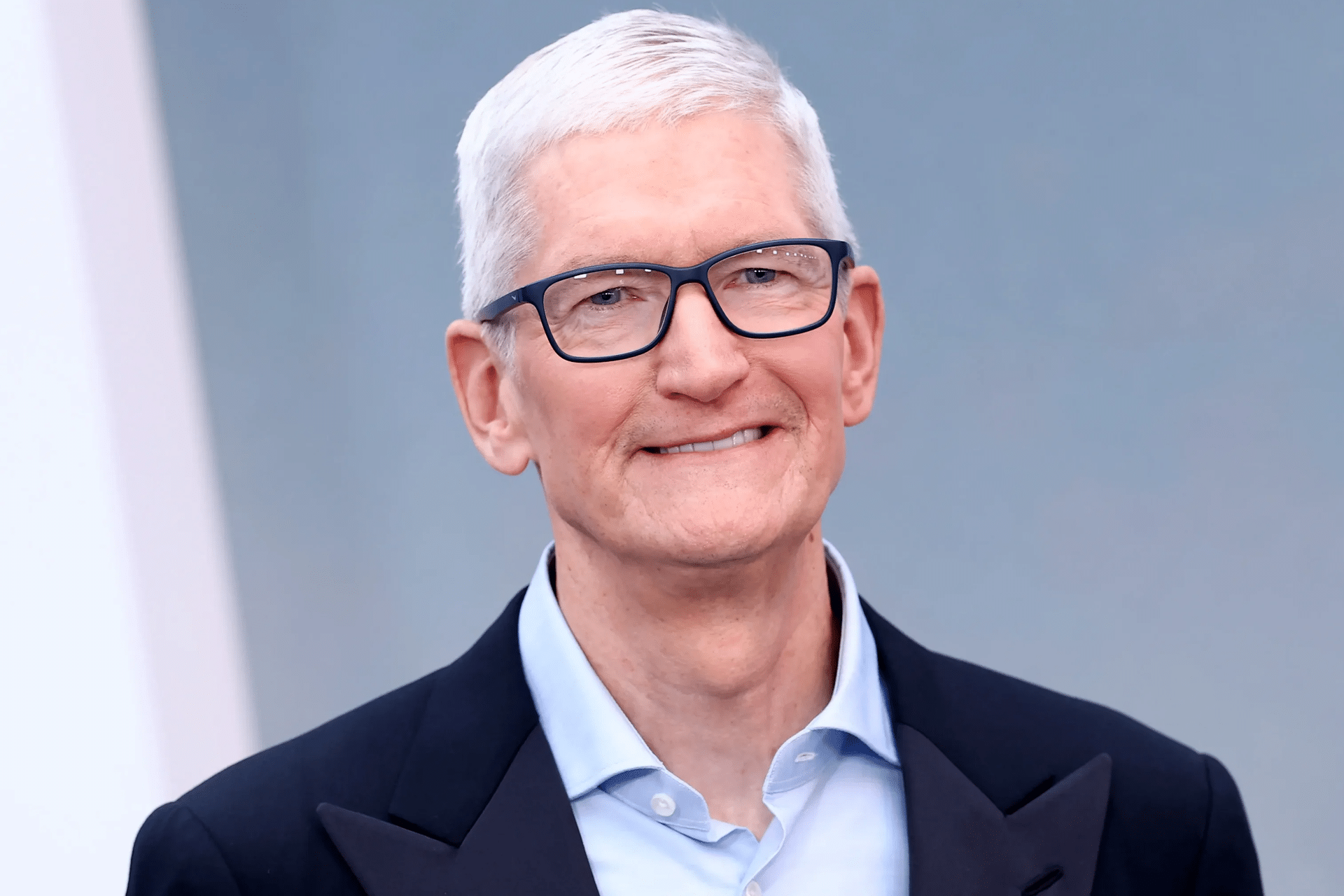

Tim Cook shared details on Apple’s AI development in a new interview. He described the transition from initial AI explorations to the current Apple Intelligence system integrated into iOS 26 and macOS Tahoe.

Cook explained that Apple began serious AI work around 2017, building teams and acquiring startups focused on machine learning. Early efforts centered on improving Siri and camera processing on the iPhone. The company prioritized on-device computation to keep data local, avoiding the cloud dependencies that competitors rely on.

Apple Intelligence first shipped with iOS 18.1 in October 2024 and carries forward into iOS 26, released in September 2025, with tools like Writing Tools for text refinement and Image Playground for generating visuals. These run primarily on the device, using the A19 chip in newer iPhones and M5 processors in Macs. For complex tasks, Private Cloud Compute routes requests to dedicated servers without storing user data.

AI Development Timeline

Apple’s AI investments grew steadily. By 2020, the company employed thousands in machine learning roles. Acquisitions included Xnor.ai for edge AI and Voysis for voice recognition. Cook noted that privacy requirements shaped every decision, leading Apple to reject models that needed constant cloud access.

Siri received updates first, gaining contextual awareness in iOS 18, the release that preceded iOS 26. Apple Intelligence expanded this with natural language improvements and app integrations. Users can now ask Siri to edit photos, or to summarize emails directly in Mail. The system supports English initially, with more languages planned for 2026.

On-device processing handles most requests. The iPhone 17 Pro uses its Neural Engine for tasks like object removal in Photos. When server help is needed, data is encrypted in transit and deleted after processing. Apple publishes the server software so that outside researchers can verify it.
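The on-device-first routing described above can be pictured with a small sketch. This is purely illustrative and is not Apple's implementation: the `Request` and `Device` types, the notion of "compute units", and the `route` and `handle` functions are all hypothetical names invented here to show the shape of the decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    feature: str         # illustrative label, e.g. "object_removal"
    estimated_cost: int  # hypothetical compute units the task needs


@dataclass(frozen=True)
class Device:
    name: str
    compute_budget: int  # hypothetical compute units available locally


def route(request: Request, device: Device) -> str:
    """Prefer local processing; fall back to the server only when needed."""
    if request.estimated_cost <= device.compute_budget:
        return "on_device"
    return "private_cloud"


def handle(request: Request, device: Device) -> dict:
    """Describe how a request would be treated under the policy the article states."""
    target = route(request, device)
    leaves_device = target == "private_cloud"
    return {
        "feature": request.feature,
        "target": target,
        # Per the article, server-bound data is encrypted in transit...
        "encrypted_in_transit": leaves_device,
        # ...and nothing is kept once the response has been produced.
        "retained_after_processing": False,
    }


if __name__ == "__main__":
    phone = Device(name="example phone", compute_budget=10)
    print(handle(Request("object_removal", 3), phone))
    print(handle(Request("long_document_summary", 50), phone))
```

In this toy model a light task such as object removal stays on the device, while a heavier one is sent to the server path. The real system presumably weighs far more than a single cost number.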

Privacy and Security Measures

Cook emphasized that Apple Intelligence avoids training on user data. Models are trained on licensed content and synthetic datasets. Private Cloud Compute operates in secure facilities, with independent audits confirming no data retention.

For iPhone users, this means features like Genmoji creation stay local. The system checks device capabilities before enabling tools; older models access basic functions via the cloud with user consent. Beta testers report smooth performance in everyday apps like Notes and Messages.

Future updates include deeper app integrations. Developers gain access through the App Intents framework, which lets apps expose custom actions to the system. Vision Pro supports spatial AI for object placement in environments.

Cook views Apple Intelligence as an extension of existing features rather than a separate product. It works across iPhone, iPad, and Mac with Continuity for seamless handoffs.

See Apple’s overview at apple.com/apple-intelligence. Check our iOS 26 guide at Apple Magazine.