Apple is tightening its App Store policies around how developers can collect and share personal data with AI systems, introducing new rules designed to limit how user information is transferred to external machine-learning models. The update, reported by CNET, comes as Apple prepares a wider rollout of Apple Intelligence features while balancing regulatory pressure and growing scrutiny of data practices across the tech industry.

The new rules apply to all developers submitting or updating apps in the App Store and outline how personal data — including location, contacts, device identifiers and behavioral analytics — may be used when interacting with third-party AI platforms. The guidelines state that developers must disclose any AI-related data sharing, obtain explicit user consent and avoid sending sensitive information to external services unless absolutely necessary for the app’s core function.
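To make the consent and minimization requirements more concrete, here is a minimal Swift sketch of a gate an app might place in front of any call to an external AI service. It assumes hypothetical types (`PersonalDataCategory`, `AIConsent`, `fieldsToShare`) that are not Apple APIs. In a real app, the consent flag would come from the app’s own opt-in screen and storage.

```swift
import Foundation

// Hypothetical categories of personal data an app might hold (not an Apple API).
enum PersonalDataCategory: Hashable {
    case location, contacts, deviceIdentifier, behavioralAnalytics, userText
}

// Hypothetical record of what the user explicitly agreed to share with an external AI service.
struct AIConsent {
    let grantedExplicitly: Bool
    let allowedCategories: Set<PersonalDataCategory>
}

enum AISharingError: Error {
    case noExplicitConsent
}

/// Returns only the fields that the feature needs AND the user agreed to share.
/// Throws if the user never opted in, so no request is built at all.
func fieldsToShare(
    available: [PersonalDataCategory: String],
    requiredForCoreFeature: Set<PersonalDataCategory>,
    consent: AIConsent
) throws -> [PersonalDataCategory: String] {
    guard consent.grantedExplicitly else {
        throw AISharingError.noExplicitConsent
    }
    // Data minimization: keep only categories that are both needed and permitted.
    let permitted = requiredForCoreFeature.intersection(consent.allowedCategories)
    return available.filter { permitted.contains($0.key) }
}

// Example: a translation feature only needs the text the user typed.
let collected: [PersonalDataCategory: String] = [
    .userText: "Translate: good morning",
    .location: "37.33,-122.01",
    .deviceIdentifier: "ABC-123",
]
let consent = AIConsent(grantedExplicitly: true, allowedCategories: [.userText, .location])
do {
    let payload = try fieldsToShare(
        available: collected, requiredForCoreFeature: [.userText], consent: consent)
    print(payload)  // only the .userText entry survives
} catch {
    print("Not sending anything: \(error)")
}
```

Because the function intersects what the feature needs with what the user allowed, a field the user approved but the feature does not need (location, in this translation example) is still left out of the request.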

Apple’s Privacy Framework and AI Integration

The policy change aligns with Apple’s long-standing privacy strategy, in which user control and data minimization are central to platform design. As AI tools become embedded in more apps, Apple is drawing a clearer line between data that stays on the device and data that can be transmitted to cloud-based models.

According to CNET’s reporting, apps that rely on third-party AI models, such as generative text tools, translation engines or image-processing models, will need to make clear to users when their data is being shared and limit the types of personal information they send. Apple warns developers that sending excessive data to external AI endpoints could violate the App Store Review Guidelines and lead to the app being rejected.

Developers are also required to specify whether data shared with AI models will be stored, used to train future models or shared with additional parties. These disclosures must appear in the app’s privacy label, a requirement that Apple has been expanding over the past several years to increase transparency.
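Privacy labels themselves are filled in through App Store Connect rather than in code, but keeping the three answers the rules ask for (stored, used for training, shared further) in one place can help a team keep the label, the in-app consent text and the app’s actual behavior consistent. The Swift sketch below uses a hypothetical `AIDataDisclosure` type and a made-up provider name; it is not Apple’s privacy-label schema.

```swift
import Foundation

// Hypothetical in-app record of one AI integration's data practices.
// This is NOT Apple's privacy-label schema; labels are entered in App Store Connect.
struct AIDataDisclosure {
    let provider: String
    let dataStored: Bool
    let usedForTraining: Bool
    let sharedWithAdditionalParties: Bool

    /// Plain-language summary that could be reused in a consent prompt.
    var summary: String {
        let parts = [
            dataStored ? "stores your data" : "does not store your data",
            usedForTraining ? "may use it to train models" : "does not use it for training",
            sharedWithAdditionalParties ? "may share it with other parties" : "does not share it further",
        ]
        return "\(provider) " + parts.joined(separator: ", ")
    }
}

let disclosure = AIDataDisclosure(
    provider: "ExampleAI",  // hypothetical provider name
    dataStored: true,
    usedForTraining: false,
    sharedWithAdditionalParties: false
)
print(disclosure.summary)
// ExampleAI stores your data, does not use it for training, does not share it further
```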

The update arrives as AI adoption accelerates across the software industry. Many developers have integrated external AI APIs to enable chatbots, automation tools and creative features. Apple’s new rules underline that these integrations must not compromise the privacy expectations the platform promotes.

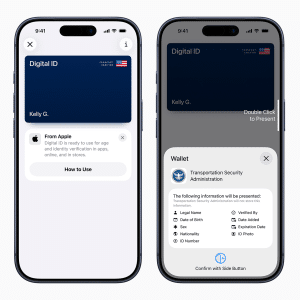

Apple Intelligence, the company’s on-device-first AI system, processes most tasks locally on Apple silicon. For operations that require the cloud, Apple uses Private Cloud Compute, a system designed to minimize the exposure of user data. The new App Store rules extend that philosophy to the broader developer ecosystem by requiring third-party AI workflows to follow similar standards.

Developer Impact

Developers relying on remote AI services may need to revise their data-handling workflows to pass App Store review. For many, this could include reducing the volume of data sent to servers, stripping identifying metadata or offering AI functionality only after explicit opt-in from the user.
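As a rough illustration of the first two adjustments, the Swift sketch below trims an outgoing request before it reaches a remote model: it keeps only allowlisted metadata keys, redacts email addresses from the prompt and caps the prompt length. `AIRequest`, `minimized`, the allowlist and the length limit are hypothetical, and the single email pattern is only an example; a real app would likely need broader redaction for names, phone numbers and other identifiers.

```swift
import Foundation

// Hypothetical outgoing request to a third-party AI endpoint.
struct AIRequest {
    var prompt: String
    var metadata: [String: String]
}

/// Minimal data-minimization pass, assuming a developer-chosen allowlist of
/// metadata keys and a character budget for the prompt.
func minimized(_ request: AIRequest,
               allowedMetadataKeys: Set<String>,
               maxPromptLength: Int) -> AIRequest {
    // Keep only metadata the feature needs (allowlist, not blocklist).
    let metadata = request.metadata.filter { allowedMetadataKeys.contains($0.key) }

    // Redact email addresses from free text before it leaves the device.
    let emailPattern = "[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\\.[A-Za-z]{2,}"
    let redacted = request.prompt.replacingOccurrences(
        of: emailPattern, with: "[email]", options: .regularExpression)

    // Cap the amount of text sent; clamp so a negative limit cannot trap.
    let trimmed = String(redacted.prefix(max(0, maxPromptLength)))
    return AIRequest(prompt: trimmed, metadata: metadata)
}

let request = AIRequest(
    prompt: "Summarize the note from jane.doe@example.com about Friday",
    metadata: ["deviceID": "ABC-123", "locale": "en_US"]
)
let outgoing = minimized(request, allowedMetadataKeys: ["locale"], maxPromptLength: 500)
print(outgoing.prompt)    // Summarize the note from [email] about Friday
print(outgoing.metadata)  // ["locale": "en_US"]
```

An allowlist is used rather than a blocklist so that a newly added metadata field is withheld by default until someone decides the feature actually needs it.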

Some developers are expected to move more processing on device where possible, particularly as iPhones and iPads ship with increasingly capable neural processing hardware. This mirrors Apple’s broader push toward privacy-first, on-device computation rather than wholesale transfer of data to the cloud.

Implications for AI Regulation

Apple’s move follows a global pattern where regulators and platform operators are paying closer attention to how AI systems manage personal information. The European Union, for instance, has been advancing compliance requirements through the EU AI Act, and U.S. agencies have increased oversight of data transfer practices involving AI tools.

By implementing these rules now, Apple is positioning the App Store ahead of emerging regulatory frameworks. Developers who adapt to these standards early may benefit from greater long-term stability as other platforms introduce similar constraints.

The new guidelines reflect a moment in which the rapid growth of AI features intersects with established privacy norms. Apple’s update sets clear expectations for developers building AI-enabled apps, reinforcing that personal data must be handled with care as the next wave of AI tools becomes mainstream.